The Information Philosopher

Watch Our 50 I-Phi Lectures

I’ve spent the last twenty-five years building this website using a web publishing system of my own design that preserves every draft of every page (following a suggestion in 1999 by Tim Berners-Lee), so that future scholars who want to follow my development can do so. Press @ to travel the site in time.

Over the last seventy years I’ve read many works of philosophers and scientists, marking up their books with my notes and critical comments. For over 500 thinkers, I’ve started web pages (links in the left navigation) on their work, including hyperlinks to many hundreds of web pages explaining critical concepts in philosophy and science.

I’ve organized those concepts into about 100 menu items under the eleven drop-down menus above. Using the hyperlinks, you can navigate from thinker to concept, then to related thinkers and their concepts, and so on.

The level of the material is the same as my lectures at Harvard in the 1960s, which were often a student’s first science course. My pages should easily be understood by those who've taken physics or chemistry in high school.

Although looking at problems through the lens of information can provide new insights, you will find no radical new science in these pages. My quantum physics is the standard “orthodox” theory of Niels Bohr, Werner Heisenberg, Max Born, Erwin Schrödinger, P.A.M. Dirac, John von Neumann, and critically important, Albert Einstein, despite his severe criticism of Bohr and Heisenberg's Copenhagen Interpretation.

I will introduce you to the equations of quantum physics. You will of course need some higher education, even graduate studies, to actually work with the equations. I hope my introductions will help you launch scientific (or philosophical) careers. And I'm available to comment on your work.

Unlike many of my very busy professional colleagues, I answer all my correspondents’ questions, suggestions, and pet theories. As a past software developer, I know that user feedback is critically important for getting things right. Contact me at bobdoyle@informationphilosopher.com.

Although I don't introduce any new physics, I’ve been most fortunate to have had some original ideas: proposed solutions to problems in physics and philosophy. They include the flatness of the universe (1959), the continuous spectrum of the hydrogen quasi-molecule (1968), the cosmic creation process that appears to violate the second law of thermodynamics (1989), the two-stage model of free will (2010), the quantum origin of irreversibility in statistical physics (2016), the experience recorder and reproducer (ERR) model of the mind (2016), and a causal explanation of quantum entanglement without faster-than-light “action at a distance” (2020).

If you want to learn about my overall career, please see my about page. Below I start with my lifelong search for answers to some big problems in cosmology, physics, biology, and neuroscience.

I entered Harvard in 1958 to study for a Ph.D. in Astrophysics. I came from Brown with an Sc.B. in Physics and a question about information that I've spent the rest of my life trying to answer.

At Brown I studied with physics department chairman Bruce Lindsay, reading his text Foundations of Physics, written with Yale professor Henry Margenau.

We also read The Nature of the Physical World, written by Arthur Stanley Eddington, whose observations of the bending of light during the 1919 solar eclipse made Albert Einstein's general relativity and its prediction of the curvature of space famous.

Eddington taught me that entropy and the second law of thermodynamics are different from other physical laws, especially the classical mechanics of Newton. Newton's laws are all time-reversible, whereas entropy can only increase, its direction providing an "arrow of time."

Besides its time direction, Eddington said entropy had less in common with physical concepts like distance, mass, and force, and more in common with aesthetic concepts like beauty and melody, even life itself. He wrote that entropy was "a measure of the random element in arrangement" and "we faintly suspect some deeper significance touching that which appears in our consciousness as purpose (opposed to chance)." I saw a connection to values!

Cosmologists then saw the origin of the universe as a hot plasma with extremely high density and temperature, in a state of maximum entropy, complete disorder. This puzzled me.

I asked my professors in the astronomy department: if entropy started at a maximum and can only increase, so that the universe is heading toward a so-called "heat death," how is it that today we can be having such intelligent conversations about cosmology? How is all our knowledge, all this information, being created?

Only two of the faculty took my question seriously. Chuck Whitney taught me about radiative energy transfer in stellar interiors and suggested I look for possible equations of entropy transfer. David Layzer had also read Eddington.

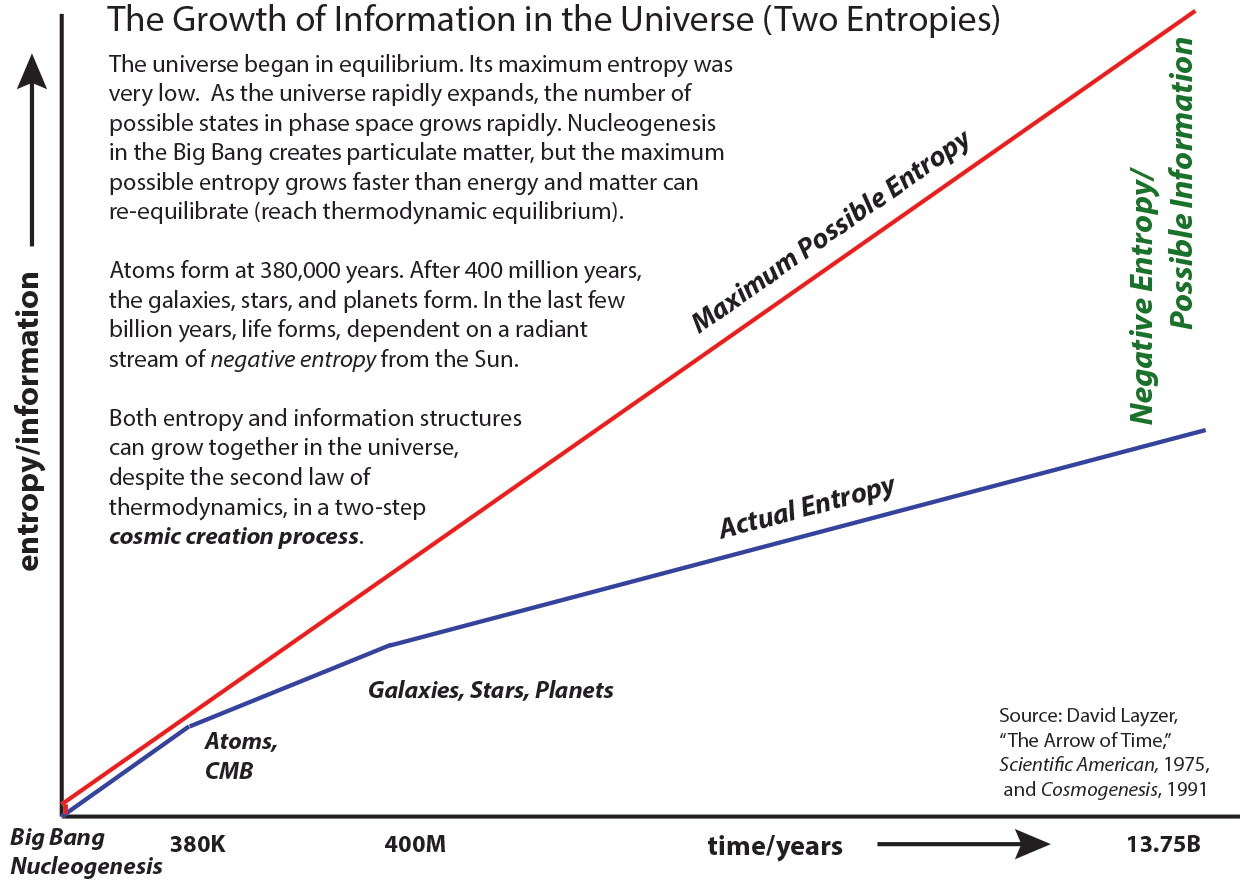

Layzer used Eddington's suggestion (in his 1934 book New Pathways in Science) that the expansion of the universe could generate local reductions in entropy in which planets, stars, and galaxies can form.

As every student of thermodynamics knows, an irreversible temporal process decreasing the physical entropy locally requires a compensating increase in global entropy to satisfy the second law of thermodynamics.

I'd like to call this Eddington's Law: No Local Entropy Decline Without a Global Entropy Increase

Layzer's book Cosmogenesis: The Growth of Order in the Universe showed how both disorder (entropy) and order (negative entropy) are increasing in the universe. I adapted Eddington's and Layzer's descriptions in this diagram to show how actual entropy increases much more slowly than the maximum possible entropy, leaving room for the growth of information.

See Bob's talk on the Brain in Olivier Wright's PSI project on Free Will

Read Our I-Phi Books

See Bob's iTV-Studio Design

Bob's Desktop Video Group has been "helping communities communicate" for fifty years.

Just how this local decrease and accompanying global increase might work in living things was made clear by Erwin Schrödinger in his classic 1944 book "What Is Life?"

Schrödinger showed that a living system must have an external source of negative entropy (or energy some of which is "freely available" to do work) to hold the system at low entropy while the entropy in its surroundings increases.

I'd like to call this Schrödinger's Rule: No Growth Without A Negentropy Source

Schrödinger identified that external source of negative entropy as our Sun. But he did not explain how the universe could create such a star (at a critical distance from a habitable planet). That explanation belongs to Eddington.

Let's illustrate how a star at a very high temperature can be the free energy source for a planet at the right distance.
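As a rough worked example, assuming round textbook values (a solar surface temperature of about 5772 K, a solar radius of 6.957 × 10⁸ m, an Earth-Sun distance of 1.496 × 10¹¹ m, and a planetary albedo of 0.3), the Python sketch below estimates a planet's radiative equilibrium temperature and compares the entropy that arrives with each joule of sunlight to the entropy carried away when that joule is re-radiated. It uses the simple Clausius estimate Q/T for both flows; blackbody radiation actually carries (4/3)Q/T, a factor that cancels in the ratio. The function names are illustrative only.

```python
import math

# Assumed round values (SI units); not taken from the original text.
T_SUN = 5772.0        # solar effective temperature, K
R_SUN = 6.957e8       # solar radius, m
D_PLANET = 1.496e11   # Earth-Sun distance, m
ALBEDO = 0.3          # fraction of sunlight reflected


def equilibrium_temperature(t_star, r_star, distance, albedo):
    """Radiative equilibrium temperature of a fast-rotating planet.

    Absorbed power (1 - A) * L / (4 pi d^2) * pi R_p^2 balances emitted
    power 4 pi R_p^2 * sigma * T^4, giving
    T = T_star * sqrt(R_star / (2 d)) * (1 - A)^(1/4).
    """
    return t_star * math.sqrt(r_star / (2.0 * distance)) * (1.0 - albedo) ** 0.25


def entropy_budget(q_joules, t_hot, t_cold):
    """Clausius estimate: entropy received at t_hot, entropy exported at t_cold."""
    s_in = q_joules / t_hot
    s_out = q_joules / t_cold
    return s_in, s_out


t_planet = equilibrium_temperature(T_SUN, R_SUN, D_PLANET, ALBEDO)
s_in, s_out = entropy_budget(1.0, T_SUN, t_planet)

print(f"planet temperature    ~ {t_planet:.0f} K")
print(f"entropy in per joule  ~ {s_in:.2e} J/K")
print(f"entropy out per joule ~ {s_out:.2e} J/K")
print(f"export/import ratio   ~ {s_out / s_in:.1f}")
```

With these inputs the planet comes out near 255 K, so each joule leaves carrying a little over twenty times more entropy than it brought in. That surplus export to space is consistent with Schrödinger's point: the planet's surroundings gain far more entropy than any local order built along the way.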

Ilya Prigogine won the 1977 Nobel Prize in Chemistry for his idea that nonlinear thermodynamics is needed to bring order out of chaos. He described complex systems far from equilibrium as "dissipative structures." Today complexity science studies such dissipative structures as complex systems.

The behavior of a complex adaptive system (CAS) is not reducible to the behavior of its component parts. The parts of a CAS are thought to be interconnected in communicating networks that allow the system to "self-organize" and adapt to changes in its environment. Some relatively simple chemical processes are autocatalytic. More complex systems, including living things, are called autopoietic, or "self-making."

Erwin Schrödinger also explained how genetic information could be stored in the atomic structure of a long molecule or "periodic crystal," which was found to be DNA just nine years later by James Watson and Francis Crick.

In 1948, just five years before James Watson and Francis Crick discovered the structure of DNA, Claude Shannon formalized the theory of communication of information, describing digital "bits" of information as 1's and 0's (or the Yes and No answers to questions). Shannon said that the amount of information communicated depends on the number of possible messages. With eight possible messages, Shannon says one actual message communicates three bits of information (2³ = 8).

If there is only one possible message, there is no new information. This is the determinist's view: there is only one possible future; both it and the entire past were completely determined from time zero; and the total information in the universe is a conserved constant, as many physicists, and some world religions, mistakenly believe.

I'd like to call this Shannon's Principle: No New Information Without Possibilities
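Shannon's counting can be sketched in a few lines of Python. The function names below are illustrative, not from any library: one message chosen from N equally likely possibilities carries log₂ N bits, so eight possibilities give three bits and a single possibility gives none. For unequal probabilities, the average information is Shannon's entropy H = -Σ p log₂ p.

```python
import math


def information_bits(n_messages):
    """Bits conveyed by one message chosen from n equally likely messages."""
    if n_messages < 1:
        raise ValueError("need at least one possible message")
    return math.log2(n_messages)


def shannon_entropy(probabilities):
    """Average information H = -sum(p * log2 p) over a probability distribution."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


print(information_bits(8))            # 3.0  (eight possible messages)
print(information_bits(1))            # 0.0  (only one possible message)
print(shannon_entropy([0.5, 0.5]))    # 1.0  (a fair coin)
print(shannon_entropy([0.9, 0.1]))    # less than one bit for a biased coin
```

The single-message case is the determinist's one possible future in numerical form: log₂ 1 = 0, so no new information can be communicated.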

John Wheeler proposed a deep relationship between immaterial information and material entropy that he called "It from Bit." He thought the "Its" of actual information structures emerge from the "Bits" of possible new information.

Following Shannon, I thought that new information, the emergence of novelty in the universe ("something new under the sun"), would require a temporal process that depends first on the existence of new possibilities and then second on the selection or choice of one actual outcome. First possibilities, then actualities.

I found Shannon's possibilities in the writings of nineteenth-century philosopher William James, especially his 1884 article "The Dilemma of Determinism." My first philosophical publication was titled "A Two-Stage Model of Free Will" in William James Studies. James had seen these two stages in Charles Darwin's 1859 theory of natural selection: first random or chance possible variations, one of which is made actual by natural selection of the variation with the greatest reproductive success.
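A minimal Python sketch of the two stages, built on a made-up toy problem (the names two_stage_choice, random_variation, and fitness are hypothetical): chance generates several possible variations, and a selection step makes the one with the highest score actual. It illustrates the general pattern only, not a model of evolution or of the will.

```python
import random


def two_stage_choice(generate, evaluate, n_alternatives, rng):
    """First stage: generate chance alternatives. Second stage: select one.

    The first stage is random; the second is a deterministic evaluation
    that makes exactly one of the alternatives actual.
    """
    alternatives = [generate(rng) for _ in range(n_alternatives)]
    return max(alternatives, key=evaluate), alternatives


# Hypothetical toy problem: variations are numbers in [0, 1), and
# "fitness" rewards closeness to an arbitrary target value of 0.7.
TARGET = 0.7


def random_variation(rng):
    return rng.random()


def fitness(x):
    return -abs(x - TARGET)


rng = random.Random(42)  # seeded so the run is repeatable
chosen, candidates = two_stage_choice(random_variation, fitness, 10, rng)
print(f"{len(candidates)} possibilities, one made actual: {chosen:.3f}")
```

Keeping generation and evaluation as separate functions mirrors the separation of the stages: swapping in a different source of chance, or a different selection criterion, changes one stage without touching the other.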

We now know that the only indeterministic chance is quantum chance, and that Einstein discovered this ontological chance ten years before Werner Heisenberg's uncertainty principle.

I'd like to call this Einstein's Discovery of Chance.

I saw such a two-step or two-stage temporal process (first chance possibilities, followed by the selection of one actuality) as the essence of the cosmic creation process, and I have found that it can explain five great problems in science and in philosophy.

They include 1) the two-step process of biological evolution, chance variations or mutations in the genetic code followed by natural selection of those with greater reproductive success.

2) The two-stage model of freedom of the human will, first random alternative possibilities followed by an adequately determined practical or moral choice to make one actual.

3) Claude Shannon's theory of the communication of information also involves these two steps or stages (the Shannon principle). The amount of information communicated depends on the number of possible messages.

If there is only one possible message, like the determinist's one possible future, no new information is communicated. If there is only one possible future, then the future and the entire past have been completely determined from time zero, and the information in the universe would be a conserved constant, as many physicists, and some world religions, mistakenly believe.

4) The "weird" phenomenon of entanglement does not communicate information faster than light, as is often mistakenly thought. Instead, it causes two widely separated quantum particles, each with two random possible states, to collapse into two actual, perfectly correlated states.

In their famous 1935 EPR Paradox paper, Einstein and his colleagues Boris Podolsky and Nathan Rosen thought the measurement of one particle must act instantaneously on the other to force its measurement to agree with the first. Schrödinger immediately wrote to Einstein to explain that the two-particle wave function Ψ₁₂, the solution to his wave equation, would collapse into single-particle wave functions Ψ₁ and Ψ₂ with perfectly correlated properties.

I hope to show that a common cause, a constant of the motion that conserves spin angular momentum, and the spherical symmetry of the wave function can explain the perfect correlations of newly created bits of information at widely separated locations.

5) Our model of the mind as an experience recorder and reproducer stores information about experiences in random possible synapses of neural networks. Neuroscientist Donald Hebb's rule is often summarized as "neurons that fire together get wired together." The ERR model says neurons that are wired together will later fire together, recalling memories from the "Hebbian assemblies."

A new experience will fire its own neural network dependent on that experience. It will likely overlap many older experiences that resemble the new experience in some ways. Neurons firing in the overlap areas will recall those earlier experiences, together with the original feelings. The earlier experiences provide context and meanings for the new experience.

When many earlier experiences "come to mind," William James called it a "blooming, buzzing confusion," but our ERR model sees the many possible meanings as the sources of our creativity and of our free will when we make one possible choice actual.

The information in our minds is not stored as digital data, despite the widespread but mistaken idea in cognitive neuroscience that our brains are digital computers. There is no "representation" of our knowledge that could be seen by a neuroscientist or captured in an MRI or MEG (magnetoencephalography) scan.

Not a representation but a re-presentation

When a Hebbian assembly fires again, whether stimulated by a new experience or simply by our thinking, it reproduces or re-presents stored information; it recalls or "calls to mind" what we know. But that's not because the information is stored as logical 1/0 bits of propositional logic in our neural synapses, as imagined by Warren McCulloch and Walter Pitts in 1943, before a digital computer existed! Our ERR model is purely biological, in no way a machine.
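For readers who want to see Hebb's rule itself in action, here is a toy associative memory in Python. It is an assumption-laden illustration, not the ERR model: the eight units, their ±1 coding, and the names hebbian_weights and recall are hypothetical, and the ±1 units are exactly the kind of machine simplification the paragraph above rejects as a description of real brains. Units active together during an "experience" have their connections strengthened; later, stimulating only part of the pattern lets those connections fire the rest, a simple analogue of an assembly being re-presented.

```python
# Toy Hebbian associative memory (Hopfield-style). Hypothetical illustration
# of Hebb's rule only; it is not the ERR model.

def hebbian_weights(patterns):
    """Strengthen w[i][j] whenever units i and j are active together."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / len(patterns)
    return w


def recall(w, cue, steps=5):
    """Let the network settle: each unit follows the weighted input from the others."""
    state = list(cue)
    n = len(state)
    for _ in range(steps):
        for i in range(n):
            h = sum(w[i][j] * state[j] for j in range(n))
            if h != 0:
                state[i] = 1 if h > 0 else -1
    return state


experience = [1, -1, 1, 1, -1, -1, 1, -1]
w = hebbian_weights([experience])

# 0 marks units the cue does not stimulate; only half the pattern is presented.
partial_cue = [1, -1, 1, 1, 0, 0, 0, 0]
print(recall(w, partial_cue) == experience)  # True
```

With a single stored pattern, every unit in the cue pushes the unstimulated units toward their original values, so the whole pattern returns. Storing many overlapping patterns in the same weights is where recall becomes context-dependent.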

These five examples of solving problems with information creation are why I call myself the information philosopher. I strongly encourage others to go beyond analytic language philosophy and become information philosophers.

It's very important to understand that information philosophy is not the product of a single mind. Critical elements have come from the work of giants whose shoulders I stand on, including Eddington, Schrödinger, Shannon, James, Einstein, Hebb, and Darwin as described above, and physicist Richard Feynman and biologist Ernst Mayr, as described below.

You may therefore need years of graduate and post-graduate education beyond the introductory material in my books and website to help solve these problems. But as I go "beyond language and logic" (using both of course) in the few thousand pages in my website and books, I hope to be around for a few more years to answer your questions about why I see information as more fundamental than language, logic, mathematics, and physics.

You can email me at bobdoyle@informationphilosopher.com.

Note that just as language philosophy is not the philosophy of language, information philosophy is not the philosophy of information. Luciano Floridi is a philosopher of information. He studies the ethical use of information technology, including the spread of misinformation and disinformation. So far, I am the only information philosopher. I hope you will join me.

I use immaterial information and material information structures to explain problems in philosophy and physics. My formal training is in astrophysics and quantum physics, though all my life I've been reading the works of the hundreds of philosophers and scientists whose work I summarize and interconnect, with hyperlinks in the left navigation column.

I picture the quantum wave function Ψ as purely immaterial information. It's the mathematical solution of Erwin Schrödinger's wave equation. Seeing it as pure information may help to clarify what Richard Feynman called the one and only mystery in quantum mechanics.

As Feynman put the one mystery of the two-slit experiment,

"The question now is, how does it really work? What machinery is actually producing this thing? Nobody knows any machinery."

We cannot, as Feynman says, provide any "mechanism" that guides or forces particles to their observed positions and observed properties. We can describe what happens with extraordinary accuracy, but not "understand" it. "Nobody understands quantum mechanics," he said.

But describing the quantum wave function Ψ as pure information may help us to develop an information interpretation of quantum mechanics, as other physicists have attempted. The square of the wave function, |Ψ|², gives us the exact probabilities of different possibilities, which are objectively real, if immaterial.

These multiple possibilities for a property of a quantum object when it is observed and measured bothered Albert Einstein, who wanted physical properties to exist independently of observations (they don't), to be determined by physical conditions in the immediate locality of the object, and not to be "influenced" instantly by widely separated objects (they aren't). Einstein wanted physical "reality" to involve "local" interactions, not "nonlocal" "spooky action at a distance," as he called it. Had Einstein called the entanglement that he discovered in his EPR Paradox "knowledge (or information) at a distance," the last hundred years of physics might have looked quite different.

As I see it, Feynman's one mystery is how quantum waves of abstract and immaterial information going through the two slits can cause the motions and create the properties of concrete and material particles landing on the distant screen. Exploring this mysterious influence of immaterial information on material particles will better explain both Feynman's two-slit experiment and Einstein's "spooky" entanglement.

Our information explanation of the cosmic creation process shows how the expansion of the universe opened up new possibilities for different possible futures.
Some scientists (e.g., Seth Lloyd) think that the total information in the universe is a conserved quantity, just like the conservation of energy and matter. The "block universe" of special relativity and four-dimensional space-time is seen by some as the one possible future that is "already out there."

This flawed idea of a fixed amount of information in the universe supports the idea of Laplace's demon, a super-intelligent being who knows the positions and velocities of all the particles in the universe and could use Newton's laws of classical mechanics to know all the past and future of the universe. Such a universe is known as deterministic, pre-determined by the information at the start of the universe, or pre-ordained by an agent who created the universe.

This conservation of total information since time zero also supports the much older idea of an omniscient and omnipotent God with foreknowledge of the future, which threatens the idea of human free will. Logically speaking, a god cannot be both omniscient and omnipotent. If a god can possibly change the future, then he did not actually know it, unless, in his infinite wisdom, he already knew at time zero the outcome of every quantum chance event to happen at random places and times in the future.

In our study of how Albert Einstein invented most of quantum mechanics a decade before Werner Heisenberg, we showed that Einstein saw the existence of ontological chance whenever electromagnetic radiation interacts with atoms and molecules. This means that many future events, like Aristotle's famous "sea battle," are irreducibly contingent. Future events cannot be known until that future time when they either do or do not occur. The statement "the sea-battle will occur tomorrow" is neither true nor false, challenging Aristotle's bivalent logic and the "excluded middle." Does a contingent future mean an omniscient being cannot exist?
The indeterminism of quantum mechanics invalidates the idea of physical determinism as well as the idea of an omniscient being. Our work on free will limits indeterminism to the first "free" stage, where it helps to generate alternative possibilities (new thoughts), and our model requires an adequate determinism in the second "will" stage, to ensure that our actions are caused by our motives, desires, and feelings. First "free", then "will." The great scientist and philosopher of biology Ernst Mayr described evolution as a "two-step process" involving chance.

A Two-Step Process in Biology

In his 1988 Toward a New Philosophy of Biology, Mayr wrote...

Evolutionary change in every generation is a two-step process: the production of genetically unique new individuals and the selection of the progenitors of the next generation. The important role of chance at the first step, the production of variability, is universally acknowledged, but the second step, natural selection, is on the whole viewed rather deterministically: Selection is a non-chance process.

Charles Darwin most likely knew that ontological chance was responsible for the variations in the genome that lead to new species. But the idea of chance in his time was widely considered to be atheistic, especially by Darwin's wife. It challenged the idea of an omniscient and omnipotent God overseeing Nature.

A Two-Stage Model for Free Will

The great philosopher of mind William James described free will as what I have called a "two-stage" process. He wrote...

And I can easily show...that as a matter of fact the new conceptions, emotions, and active tendencies which evolve are originally produced in the shape of random images, fancies, accidental out-births of spontaneous variation in the functional activity of the excessively instable human brain, which the outer environment simply confirms or refutes, adopts or rejects, preserves or destroys, - selects, in short, just as it selects morphological and social variations due to molecular accidents of an analogous sort.

In my 2011 book Free Will, I report on over two dozen other philosophers and scientists who independently invented this two-stage model of free will, decades before and well after my own independent idea while a graduate student at Harvard in the 1970's.
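The two stages can be caricatured in a few lines of code. This is a minimal sketch under loudly stated assumptions, not the author's formal model: the option names, their trait sets, the motive weights, and the functions `generate_alternatives`, `score`, and `decide` are all hypothetical. Stage one uses a seeded random generator to propose a handful of alternatives (chance, as in Mayr's first step and James's "random images"); stage two picks among them with a fixed, deterministic evaluation, so the choice follows from the agent's motives rather than from the dice.

```python
import random

# Hypothetical options and motive weights, invented for illustration only.
OPTIONS = [
    {"name": "walk", "traits": {"health", "calm"}},
    {"name": "read", "traits": {"curiosity", "calm"}},
    {"name": "call a friend", "traits": {"friendship"}},
    {"name": "cook", "traits": {"health", "creativity"}},
    {"name": "write", "traits": {"creativity", "curiosity"}},
]
MOTIVES = {"curiosity": 3, "calm": 1, "health": 2, "creativity": 2, "friendship": 1}


def generate_alternatives(rng, options, k):
    """Stage 1 ("free"): chance proposes k candidate possibilities."""
    return rng.sample(options, k)


def score(option, motives):
    """Deterministic evaluation of one option against the agent's motives."""
    return sum(motives.get(trait, 0) for trait in option["traits"])


def decide(rng, options, motives, k=3):
    """Stage 2 ("will"): an adequately determined selection among the candidates."""
    candidates = generate_alternatives(rng, options, k)
    # Ties are broken by name, so the same candidates always yield the same choice.
    choice = max(candidates, key=lambda o: (score(o, motives), o["name"]))
    return candidates, choice


rng = random.Random(2011)
candidates, choice = decide(rng, OPTIONS, MOTIVES)
print([o["name"] for o in candidates], "->", choice["name"])
```

Changing the seed changes which alternatives come to mind, but for any given set of candidates the selection is always the same, which is one way to picture an "adequately determined" second stage.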

A "Two-Bit" Information Explanation for Quantum Entanglement

We examine what information is being created by entanglement and whether and how it is "communicated." We deny extravagant claims about quantum weirdness connecting everything in the universe. But we defend the use of entanglement to distribute the "quantum keys" used to encrypt and decrypt messages, making them perfectly secure against potential eavesdroppers.

Today's standard entanglement studies are based on an experimental apparatus suggested in 1952 by David Bohm to explore the possibilities of "hidden variables" that could explain Albert Einstein's life-long concern about "spooky actions at a distance" and the so-called Einstein-Podolsky-Rosen paradox of 1935.

Bohm's "hidden variable" proposal described a

"molecule of total spin zero consisting of two atoms, each of spin one-half. The two atoms are then separated by a method that does not influence the total spin. After they have separated enough so that they cease to interact, any desired component of the spin of the first particle (A) is measured. Then, because the total spin is still zero, it can immediately be concluded that the same component of the spin of the other particle (B) is opposite to that of A."

Note that Bohm's "can immediately be concluded" would have been "we immediately know" if Einstein had called it "knowledge at a distance."

An apparatus to realize Bohm's proposal would be a hydrogen molecule dissociating into hydrogen atoms heading toward Stern-Gerlach devices that measure their spins as up or down. But Bohm's experiment with material particles was never realized. Today experiments are done with photons and polarizers measuring the photon spin. The quantum mechanics is the same.

The spin of an atom can be up or down. An S-G device measurement creates a single bit of information we can call 1 or 0. We traditionally give the experimenters measuring particles A and B the names Alice and Bob. When Alice observes a spin up particle, she gets the bit 1. Instantly, Bob observes a spin down particle and gets the bit 0. These are well-established experimental facts on which to construct a theory of entanglement. Or we can, and we do, develop a principle theory, as Einstein strongly recommended.

Once the two particles have separated to a great distance, the naive theory is that one bit of information about Alice's up particle must have been "communicated" to Bob's particle so it can quickly adjust its random spin to be down. Or perhaps a "hidden variable" travels faster than light to "act" on particle B, causing its spin to be down. Most simply, perhaps hidden variables travel along with the particles, carrying "instruction sets" that control their behavior.
But all these theories are flawed, leading to claims that entanglement "connects" everything instantly in a "holistic" universe.

My Ph.D. thesis at Harvard in the 1960's solved the Schrödinger equation for the wave function Ψ12 of a two-atom hydrogen molecule, exactly the system David Bohm proposed in the 1950's to explore "hidden variables." This provided me with a great insight into entanglement.

Before a measurement, quantum objects like hydrogen atoms, electrons, or photons have two possible spin angular momentum states. A measurement makes one of the two possible states an actual state. The founders of quantum mechanics described this process in terms that remain controversial a century later. Werner Heisenberg said the new state is indeterministic, with possibilities, one of which comes randomly into "existence." Einstein hoped for a return to classical deterministic physics, in which objects have a "real" existence before we measure them.

The quantum mechanical wave function describing a single-particle spin is a linear combination (also called a superposition) of an up state |↑⟩ and a down state |↓⟩:

$$\Psi = \frac{1}{\sqrt{2}}\,|\uparrow\rangle + \frac{1}{\sqrt{2}}\,|\downarrow\rangle$$

The (1/√2) coefficients are squared to give us the probability 1/2 of the particle being found in either the up or down state. Half the time we find |↑⟩, and half the time |↓⟩.

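For two particles in the singlet state with total spin zero, the two-particle wave function is

$$\Psi_{12} = \frac{1}{\sqrt{2}}\,|\uparrow\downarrow\rangle - \frac{1}{\sqrt{2}}\,|\downarrow\uparrow\rangle$$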

Our explanation of quantum entanglement continues below, but you might want to skip over the extensive details to our notes on getting beyond logical positivism and analytic language philosophy to information philosophy. You may also see the entries under the Entanglement menu for further details on our proposed solution to the problem of entanglement and hidden variables.

We can simplify the notation of the equation above:

$$|\psi_{12}\rangle = \frac{1}{\sqrt{2}}\big(|\uparrow\downarrow\rangle - |\downarrow\uparrow\rangle\big)$$

Instead of one particle found randomly in an up or down state, we find the two particles half the time in an up-down state and the other half in a down-up state.

Note that each individual particle is still randomly in an up or down state, but the joint condition of opposite spin states is not random at all! The states are certain to be perfectly correlated. Quantum mechanics exactly predicts the outcomes of entanglement experiments. But is this enough of an explanation? Can we find a causal description of what's going on?
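These statistics follow from the Born rule alone. The sketch below is a minimal illustration, assuming the encoding used above for Alice's and Bob's bits (1 = up, 0 = down); the names `AMPLITUDES`, `born_probabilities`, and `sample_pairs` are hypothetical. It squares the singlet amplitudes to get probabilities and samples joint outcomes, reproducing the observed frequencies only; like quantum mechanics itself, it says nothing about any machinery.

```python
import math
import random

# Joint outcomes (Alice's bit, Bob's bit), with 1 = spin up and 0 = spin down.
# Amplitudes of the singlet state (1/sqrt 2)(|up,down> - |down,up>).
AMPLITUDES = {
    (1, 0): 1 / math.sqrt(2),
    (0, 1): -1 / math.sqrt(2),
    (1, 1): 0.0,
    (0, 0): 0.0,
}


def born_probabilities(amplitudes):
    """Born rule: the probability of each outcome is |amplitude| squared."""
    return {outcome: abs(a) ** 2 for outcome, a in amplitudes.items()}


def sample_pairs(n, seed=0):
    """Draw n joint measurement outcomes with the Born-rule frequencies."""
    rng = random.Random(seed)
    probs = born_probabilities(AMPLITUDES)
    outcomes = list(probs)
    return rng.choices(outcomes, weights=[probs[o] for o in outcomes], k=n)


pairs = sample_pairs(10_000)
alice_ones = sum(a for a, _ in pairs) / len(pairs)
bob_ones = sum(b for _, b in pairs) / len(pairs)
print(f"Alice: {alice_ones:.3f} ones, Bob: {bob_ones:.3f} ones")  # each near 0.5
print(all(a != b for a, b in pairs))  # True: the joint results are always opposite
```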

The apparatus entangling the two particles sits in the center between Alice's and Bob's measuring devices. It is also in the past light cone of their measurements, so it is the most likely candidate for a contributing cause. We call it the causal center (CC).

Alice ← Causal Center → Bob

Our model of a "common cause" explaining entanglement is a causal chain of events that begins with the local causes in the entangling apparatus that establishes the initial symmetry of the entangled particles. The particles are put into a spherically symmetric two-particle quantum state Ψ12 that Erwin Schrödinger said cannot be represented as a product of two independent single-particle states Ψ1 and Ψ2.

The causal center sends the particles off in the spherically symmetric state with total spin zero to Alice and Bob. This total spin zero is conserved as a constant of the motion.

The final causal interactions in our "causal chain" of events are the two measurements by Alice and Bob, which create two bits of digital information. As long as local measurements are made at the same pre-agreed upon angle, their planar symmetry will maintain total spin zero, the particles will have perfectly correlated opposite spin states up-down or down-up, and individual spin states will be randomly up or down. Should measurements at A and B differ by angle θ, the perfect correlations would be reduced by a factor cos²θ, as predicted by quantum mechanics.
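The angle dependence can be tabulated directly. This sketch assumes polarization-entangled photons, for which the fraction of pairs keeping the equal-angle correlation pattern is cos²θ when the polarizers differ by θ; for spin-one-half particles in Stern-Gerlach magnets the same formula holds with θ replaced by θ/2. The helper names are hypothetical. The CHSH combination at the end, with the standard settings 0°, 45°, 22.5°, and 67.5°, shows the quantum prediction 2√2 exceeding the bound of 2 that local hidden-variable models must obey. The code only evaluates the quantum-mechanical formula; it does not model any mechanism.

```python
import math


def p_correlated(theta_deg):
    """Probability that a pair keeps the equal-angle correlation pattern when the
    two polarizers differ by theta degrees (polarization-entangled photons)."""
    return math.cos(math.radians(theta_deg)) ** 2


def correlation(a_deg, b_deg):
    """Correlation E = P(kept) - P(broken) = 2cos^2(theta) - 1 = cos(2 theta)."""
    p = p_correlated(a_deg - b_deg)
    return p - (1 - p)


def chsh(a, a2, b, b2):
    """CHSH combination; local hidden-variable models give |S| <= 2."""
    return correlation(a, b) - correlation(a, b2) + correlation(a2, b) + correlation(a2, b2)


for theta in (0, 22.5, 45, 67.5, 90):
    print(f"theta = {theta:4.1f} deg: P(kept) = {p_correlated(theta):.3f}")

s = chsh(0, 45, 22.5, 67.5)
print(f"S = {s:.4f}")  # S = 2.8284, i.e. 2*sqrt(2), above the local bound of 2
```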

When Alice measures her quantum particle, let's say up, the two-particle wave function Ψ12 collapses and instantly her distant partner Bob's particle is projected into the correlated down state, conserving total spin. This is not because any actionable information is communicated from Alice to Bob. There is no "spooky action at a distance."

The two bits of information that "came into existence" were not present in the initial entanglement or in the entangled particles as they traveled to Alice and Bob. They were created by the two symmetric measurements.

See more on how a Common Cause, Constant of the Motion, and Spherical Symmetry are needed to produce the perfectly correlated (and random) outcomes of two-particle Bell experiments.

Does entanglement have something to do with the one mystery of quantum mechanics, how the abstract wave function can predict the locations or properties of concrete material particles without any causal power, without any known machinery (as Richard Feynman said), to move them? Yes, but there's more to it.

In the two-slit experiment, Feynman's classic example, there is no obvious mechanical causal chain of events, no "machinery." But in a Bell experiment, there is first a mechanism (a local causal process) that prepares the two particles in a singlet state with total spin zero, second there is conservation of this spin angular momentum state as the particles move apart, and third, the measurements by Alice and Bob are two distinct causal processes that (again locally) create two new bits of perfectly correlated information at widely separated points in space.

No information at all is "communicated" faster than light between Alice and Bob as "spooky action" theories about entanglement suggest. Nor is information traveling faster than light from the causal center to Alice and Bob, carried by the two entangled particles. If the particles are photons, they travel at the speed of light; if electrons or atoms, then below light speed.

The individually random and jointly perfectly correlated results of experiments by Alice and Bob are thus a consequence of three successive events in a causal chain starting with the local causes in the entangling apparatus that establishes the initial symmetry of the entangled particles. The particles are put into a spherically symmetric two-particle quantum state Ψ12 that conserves the total spin angular momentum as a constant of the motion. The spherical symmetry keeps the spins perfectly correlated at all times as the particles travel apart. As long as nothing interacts to disturb them during their travels, symmetric measurements will (locally) measure the spins as still correlated.

The entangling apparatus at the causal center sends the entangled particles off to Alice and Bob, each particle capable of producing one of the two bits of information. Alice and Bob share this information or knowledge "at a distance." But because each of their sequences of successive bits is quantum random, the random sequences provide no meaningful information immediately to Alice or Bob. When they later compare their sequences using ordinary communication channels, however, quantum magic happens, as we shall see.

The popular science-fiction idea that entangled particles connect everything in the universe, that meaningful messages are being exchanged telepathically between different galaxies, even galaxies billions of light years away, is simply nonsense.

Without a common cause coming from a causal center between Alice in Andromeda and Bob (me!) in the Milky Way no "entangling" cosmic connection is possible!

The sad truth about quantum entanglement is that there is nothing at all going from Alice to Bob or from Bob to Alice. What is going from the common cause in the center to both Alice and Bob is the two particles and the spherically symmetric two-particle wave function Ψ12.

When Alice and Bob make their measurements, whoever measures first (in the special frame of reference in which they and the causal center are at rest) collapses the two-particle wave function Ψ12, separating it into a product of two single-particle states Ψ1 and Ψ2.
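Using the textbook projection postulate, this collapse can be checked numerically. In this sketch the dictionary encoding and the function names are hypothetical. A pure two-particle spin state a|↑↑⟩ + b|↑↓⟩ + c|↓↑⟩ + d|↓↓⟩ is a product of two single-particle states exactly when ad − bc = 0, a standard criterion for a pair of two-state systems. The singlet Ψ12 fails that test; after particle 1 is found up and the state is projected and renormalized, what remains passes it.

```python
import math

# Two spin-1/2 particles; keys are (particle 1, particle 2) with "u" = up, "d" = down.
S = 1 / math.sqrt(2)
SINGLET = {"uu": 0.0, "ud": S, "du": -S, "dd": 0.0}


def is_product_state(psi, tol=1e-12):
    """A pure state a|uu> + b|ud> + c|du> + d|dd> factorizes into
    (single-particle state) x (single-particle state) exactly when a*d - b*c = 0."""
    return abs(psi["uu"] * psi["dd"] - psi["ud"] * psi["du"]) < tol


def measure_first(psi, outcome):
    """Projection postulate: keep only the terms where particle 1 shows `outcome`,
    then renormalize. Returns (probability of that outcome, post-measurement state)."""
    projected = {k: (v if k[0] == outcome else 0.0) for k, v in psi.items()}
    prob = sum(abs(v) ** 2 for v in projected.values())
    norm = math.sqrt(prob)
    return prob, {k: v / norm for k, v in projected.items()}


print(is_product_state(SINGLET))  # False: Psi12 is not a product of Psi1 and Psi2
prob, after = measure_first(SINGLET, "u")
print(round(prob, 3), after)  # 0.5, with all remaining weight on "ud"
print(is_product_state(after))  # True: particle 1 up, particle 2 down
```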

It is essential that Alice and Bob do not measure at just any angle. They must agree to position their polarizers or Stern-Gerlach devices in the same plane to measure at the same pre-agreed upon angle. Their planar symmetry combines with the spherical symmetry of the wave function to conserve the total spin angular momentum.

Let's look more carefully at Ψ12 and describe what happens using the mathematical foundations of quantum mechanics as formulated by Paul Dirac's "Principles of Quantum Mechanics" (actually one principle, an axiom, and a postulate).

$$\Psi_{12} = \frac{1}{\sqrt{2}}\,|\uparrow\downarrow\rangle - \frac{1}{\sqrt{2}}\,|\downarrow\uparrow\rangle$$

Dirac's principle of superposition describes the particles in a linear combination (or superposition) of |↑↓⟩ and |↓↑⟩ states.

Quantum Cryptography and Quantum Key Distribution

Alice's measurement sequences appear to her to be completely random, with approximately equal numbers of 1's and 0's, approaching equality for longer bit sequences, like this.

00010011011110101100011011000001

And Bob's sequence looks to him to be equally random, with 1's and 0's approaching 50/50.

11101100100001010011100100111110

Should Alice send her bit sequence to Bob (over ordinary channels) for comparison, he finds, when he lines the bit strings up with one another, that they are perfectly anti-correlated. Where Alice measured a 1, Bob measured a 0, and vice versa. This is explained by the condition of conserved total spin coming from the causal center, so I call it a common cause.

00010011011110101100011011000001
11101100100001010011100100111110

Although they contain no information, these random but perfectly correlated bit sequences are perfect for use as a one-time pad or "key" for encrypting coded messages. And the sequences have not been "communicated" or "distributed" over an ordinary communication channel. They have been created independently and locally at Alice and Bob in a secure way that is invulnerable to eavesdroppers, solving the problem of quantum key distribution (QKD).

As long as Alice and Bob measure at the same angle, the spherically symmetric wave function with total spin conserved gives them the symmetric result. If their angles differ by θ, their results will vary as cos²θ, as experiments violating John Bell's inequality have shown.
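The one-time-pad step can be sketched with Alice's and Bob's two sequences printed above. This is a minimal illustration, not a QKD implementation: the message "QKD!" and the helper names are hypothetical, each key must be used only once and be as long as the message, and real entanglement-based protocols such as Artur Ekert's E91 add steps (random choices of measurement angle, public comparison of a sample of results to detect eavesdropping) that are omitted here. Because Bob's bits are anti-correlated with Alice's, he first flips them to obtain the same key.

```python
# Alice's and Bob's bit sequences as given in the text.
ALICE = "00010011011110101100011011000001"
BOB = "11101100100001010011100100111110"


def complement(bits):
    """Flip every bit: Bob turns his anti-correlated sequence into Alice's."""
    return "".join("1" if b == "0" else "0" for b in bits)


def xor_bits(a, b):
    """Bitwise XOR of two equal-length bit strings (the one-time-pad operation)."""
    if len(a) != len(b):
        raise ValueError("a one-time pad must be exactly as long as the message")
    return "".join("1" if x != y else "0" for x, y in zip(a, b))


def text_to_bits(text):
    """ASCII text to a string of 8 bits per character."""
    return "".join(f"{byte:08b}" for byte in text.encode("ascii"))


def bits_to_text(bits):
    """Inverse of text_to_bits."""
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8)).decode("ascii")


alice_key = ALICE
bob_key = complement(BOB)  # Bob flips his bits to share Alice's key
print(bob_key == alice_key)  # True

message = "QKD!"  # hypothetical 4-character message = 32 bits, the key's length
ciphertext = xor_bits(text_to_bits(message), alice_key)  # Alice encrypts
print(ciphertext)
print(bits_to_text(xor_bits(ciphertext, bob_key)))  # Bob decrypts: QKD!
```

XOR with the same key twice returns the original bits, which is why Bob's flipped copy of his own measurements is all he needs to decrypt.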

Beyond Logical Positivism and Language Philosophy to Information Philosophy

Answering deep philosophical questions with words and concepts has sadly been a failure. We need to get behind the words to the underlying information structures, material, linguistic, and mental, as well as to the processes that communicate information between some structures, especially living things.

Although analytic language philosophy is still widely taught, it has made little progress for decades.

Language philosophers solve (or dis-solve!) problems in philosophy by an analysis of language, using verbal arguments with words and concepts. They discover (rediscover) the same ancient problems, forever republishing old concepts with new names and acronyms.

An information philosopher studies the origin and evolution of information structures, the foundations for all our ideas, solving the problem of knowledge.

Information philosophy is a dualist philosophy, both materialist and idealist. It is a correspondence theory, explaining how immaterial ideas represent material objects, especially in the brain and in the mind.

In a deterministic or "block" universe, information is constant. Logical and mathematical philosophers follow Gottfried Leibniz and Pierre-Simon Laplace, who said a super-intelligent being who knew the information at one instant would know all the past and future. They deny the obvious fact that new information can be and has been created.

An information structure is an object whose elementary particle components have been connected and arranged in an interesting way, as opposed to being dispersed at random throughout space like the molecules in a gas. Information philosophy explains who or what is doing the arranging.

A gas of microscopic material particles in equilibrium is in constant motion, the motion we call heat. Its macroscopic properties, like pressure, temperature, volume, and entropy (and information content), are unchanging, as are its total matter and energy. At its origin, the universe is said to have been in such an equilibrium, with the maximum possible entropy, or disorder. It contained minimal, possibly zero, internal information, apart from information about the structures of the atoms and molecules.
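One common way to make "minimal information" quantitative, assumed here rather than taken from the text, is to measure information as the shortfall of an entropy from its maximum possible value, I = log₂ M − H, where H is the Shannon entropy of how particles occupy M coarse-grained cells. In this sketch (helper names and particle counts are hypothetical), only how many particles sit in each cell is counted: an even spread over all cells gives zero information, while the same particles gathered into a few cells give a positive amount.

```python
import math
from collections import Counter


def shannon_entropy_bits(counts):
    """Shannon entropy H = -sum p log2 p of an occupation histogram."""
    total = sum(counts)
    return -sum((c / total) * math.log2(c / total) for c in counts if c > 0)


def information_bits(cell_of_each_particle, n_cells):
    """Information as the shortfall of the entropy from its maximum, log2(n_cells) - H."""
    counts = Counter(cell_of_each_particle)
    histogram = [counts.get(i, 0) for i in range(n_cells)]
    return math.log2(n_cells) - shannon_entropy_bits(histogram)


dispersed = [i % 8 for i in range(800)]  # 800 particles spread evenly over 8 cells
clustered = [0] * 700 + [1] * 100        # the same 800 particles in 2 of the 8 cells

print(information_bits(dispersed, 8))  # 0.0: an even spread carries no information
print(round(information_bits(clustered, 8), 3))
```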

When the second law of thermodynamics was discovered in the nineteenth century, physicists predicted that increasing entropy would destroy all information, and the universe would end in a "heat death." That is not happening.

Many philosophers, philosophers of science, and scientists themselves, still see deterministic "laws of nature" as models for their work. The great success of Newtonian mechanics inspires them to develop mechanical, material, and energetic explanations for biological and mental processes.

In recent decades some have gone beyond classical mechanical laws to explain evolution in terms of the laws of thermodynamics and the increase of complexity. Ilya Prigogine argued that non-equilibrium thermodynamics can bring "order out of chaos." But it takes more than non-equilibrium physics. It takes the expansion of the universe, as Eddington explained.

Information is neither matter nor energy, although it needs matter to be embodied and energy to be communicated. Why should it become the preferred basis for all philosophy?

As almost everyone knows, matter and energy are conserved. This means that there is just the same total amount of matter and energy today as there was at the origin of the universe.

But then what accounts for all the change that we see, the new things under the sun? It is information, which is not conserved and has been increasing since the beginning of time, despite the second law of thermodynamics, with its increasing entropy, which in a closed universe destroys both order and information. But our universe is open and expanding! And new information is continuously being created!

The Cosmic Creation Process

Many philosophers and scientists mistakenly think the universe must have begun with a vast amount of information, so that the information structures we have now are left over after the increasing entropy of the second law has destroyed much of the primordial information.

But the physics of the early universe, famously the first three minutes according to Steven Weinberg, shows us a state near the maximum possible entropy for the earliest moments.

How can the universe have begun in equilibrium, near maximal disorder and minimal information, yet today be in the high state of information and order we see around us? This I have called the fundamental question of information philosophy.

The answer to this fundamental question was given to me by my Harvard colleague and mentor David Layzer in the 1970's, inspired by the work of Arthur Stanley Eddington in the 1930's. In short, it is the expansion of the universe, which continually increases the space available to the limited number of particles, giving them more room and more possibilities to arrange themselves into interesting information structures. This is the basis of a cosmic creation process for all interesting things.

Cosmic creation is only possible because space expands faster than the gas particles can get back to equilibrium, making room for the growth of entropy and disorder, but also of order and information, in local pockets of negative entropy.

The entropy of the early universe was the maximum possible for its time (the universe was in equilibrium), but it was tiny compared to the actual entropy today. And today's actual entropy is smaller than today's maximum possible entropy; that difference makes room for many more information structures (negative entropy).

I have now found that this powerful insight was first seen by Arthur Stanley Eddington in his 1934 book New Pathways in Science, where he said (p.68) "The expansion of the universe creates new possibilities of distribution faster than the atoms can work through them."
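Eddington's point can be illustrated with a toy model whose rules are assumptions, not Layzer's or Eddington's own calculation. Each step, the volume available to N particles grows by a fixed factor, so the maximum entropy S_max = N ln V (in units of Boltzmann's constant, dropping additive constants) rises, while the actual entropy S only closes a fixed fraction of the remaining gap toward that maximum. The parameter values and the function `expanding_gas` are hypothetical. Starting from equilibrium (S = S_max), both S and the gap S_max − S grow: entropy increases, yet so does the room for order.

```python
import math


def expanding_gas(steps=8, n_particles=1000, volume=2.0, growth=1.5, relax=0.3):
    """Toy model: each step the volume grows by `growth`, raising the maximum
    entropy S_max = N ln V, while the actual entropy S only closes a fraction
    `relax` of the remaining gap. Returns a list of (S_max, S, gap) rows."""
    s_max = n_particles * math.log(volume)
    s = s_max  # start in equilibrium: actual entropy equals the maximum
    rows = [(s_max, s, s_max - s)]
    for _ in range(steps):
        volume *= growth
        s_max = n_particles * math.log(volume)
        s += relax * (s_max - s)  # partial relaxation toward the new equilibrium
        rows.append((s_max, s, s_max - s))
    return rows


for s_max, s, gap in expanding_gas():
    print(f"S_max = {s_max:8.1f}   S = {s:8.1f}   gap (room for order) = {gap:6.1f}")
```

Setting `relax=1.0` (instant re-equilibration) keeps the gap at zero; in this toy, the room for order exists only because the expansion outpaces relaxation, creating "new possibilities of distribution faster than the atoms can work through them."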

As pointed out to me by Layzer in 1975, Eddington's arrow of time (for him, the direction of entropy increase) points not only to increasing disorder (positive entropy) but also to increasing information (negative entropy), what Layzer called "the growth of order in the universe."

At the earliest times, purely physical forces (electromagnetic, nuclear, and gravitational) changed the arrangement of the most fundamental particles of matter and energy, quarks, electrons, gluons, and photons, into information structures like atoms and molecules, and much later into planets, stars and galaxies.

Billions of years later, living things became active information structures. Living things control the flow of matter and energy through themselves and do their own arranging of their matter and energy constituents!

New immaterial information is forever emerging. We human beings are creating new ideas!

Purely physical objects like planets, stars, and galaxies are passive information structures, entirely controlled by fundamental physical forces - the strong and weak nuclear forces, electromagnetism, and gravitation. These objects do not control themselves. They are not acting. They are acted upon.

Living things, you and I, are active dynamic growing information structures, forms through which matter and energy continuously flow. And the communication of biological information controls those flows!

Some philosophers of biology (for example John Dupré and Anne Sophie Meincke) think that biological creation processes are fundamentally different from the creative processes in non-living things, in purely physical material objects, from atoms and molecules to planets, stars, and galaxies.

Before life as we know it, some information structures blindly replicated their information. Some of these replication processes were fatal mistakes, but very rarely the mistake was an improvement, with better reproductive success.

In life today, those random errors produce some of the variations followed by natural selection which adapts living things to their environments. The first stage in our two-stage model is the same blind randomness of chance in non-living and living things. What differs between these creative processes is the appearance of minds and of purpose, of goals and intentions, in the universe. All living things are agents!

Even the smallest living things develop behaviors, sensing information about and reacting to their environment, sending signals between their parts (cells, organelles) and to other living things nearby. These behaviors can be interpreted as intentions, goals, and agency, introducing purpose into the universe.

The goals and purposes of living things are not the "final goal" or purpose of Aristotle's Metaphysics that he called "telos" (τέλος). Teleology is the idea that there is a cosmic purpose that preceded the creation of the universe and which points toward an end goal. Teleology underlies many theologies, in which a creator God embodies the telos, just as a sculptor previsualizes the statue within a block of marble. Perhaps the best known is that of Teilhard de Chardin, whose end goal, which he called the "Omega Point," is Jesus Christ. In many religions, the creator predestines or predetermines all the events in the universe, a theological idea that fit well with the mechanical and deterministic laws of Nature discovered by Isaac Newton in the seventeenth-century age of enlightenment.

The biologist Colin Pittendrigh coined the term teleonomy to distinguish the purpose we see in all living things from a hypothetical teleological purpose from before the origin of the universe. Jacques Monod and Ernst Mayr also stressed the important distinction between teleonomy and teleology.

Information is the modern spirit, the ghost in the machine, the mind in the body. It is the soul. When we die, it is information that perishes, unless the future preserves it. The matter remains.

Information philosophers think that if we don't remember the past, we don't deserve to be remembered by the future. This is especially true for the custodians of knowledge.

In the natural sciences the most important references are usually the most recent. In the humanities and social sciences the opposite is often true. The earliest references were invented ideas that became traditional beliefs, now deeply held without further justification.

This website is not based on the work of a single thinker. It includes the work of over five hundred philosophers and scientists, critically analyzed over seven decades by this information philosopher, with extensive quotations from the original thinkers and PDFs of major parts of their work (sometimes in the original language).

Information philosophy can explain the fundamental metaphysical connection between materialism and idealism. It replaces the determinism and metaphysical necessity of eliminative materialism and reductionist naturalism with metaphysical possibilities.

Unactualized possibilities exist in minds as immaterial ideas. They are the alternative actions and choices that are the basis for our two-stage model of free will.

The existence (perhaps metaphysical) of alternative possibilities explains how both new ideas and new species arise by chance, the consequence of quantum indeterminism.

Neurobiologists question the usefulness of quantum indeterminism in the brain and mind. But it is the sometimes random firing of particular neurons and their subsequent wiring together that records an individual's experiences, experiences distinctly different in ways that contribute to every unique "self": what it's like to be me.

Faced with a new experience, the experience recorder and reproducer (ERR) causes some neurons to "play back" those encoded past experiences that are similar in some way to the current experience. The "playback" is complete with the emotions that were attached to the original experiences. Memory of and learning from diverse past experiences provides the context that adds "meaning" to the current experience. The number of past experiences recalled may be very large.

William James described this as a "blooming, buzzing confusion." He called for us to focus attention on the alternative possibilities in his "stream of consciousness." In Bernard Baars's "Theater of Consciousness," these possibilities are the past experiences of the audience members whose hands are raised, experiences that give them something relevant to add to the conversation.

Some information enthusiasts claim that information is the fundamental stuff of the universe. It is not. The universe is fundamentally composed of discrete particles of matter and energy. Information describes the arrangement of the matter. Where the arrangement is totally random, there is no information. The organized information in living things has a purpose, to survive and to increase.

Information is the form in all discriminable concrete objects as well as the content in non-existent, merely possible, thoughts and other abstract entities. Information is the disembodied, de-materialized essence of anything.

Perhaps the most amazing thing about information philosophy is its discovery that abstract and immaterial information can exert an influence over concrete matter, explaining how mind can move body, how our thoughts can control our actions, deeply related to the mystery of how the quantum wave function (randomly) controls the probabilities of locating quantum particles.

It is immaterial information in the collapse of the two-particle wave function Ψ₁₂ that ensures perfectly correlated measurements no matter how far entangled particles are separated.
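For readers who want to see what a two-particle wave function looks like, a standard textbook example is the spin singlet state. It is our assumption that this is the kind of state meant; the text does not specify one. For measurements of both particles along a common axis:

```latex
\Psi_{12} = \frac{1}{\sqrt{2}}\Big( |{+}\rangle_1 |{-}\rangle_2 \;-\; |{-}\rangle_1 |{+}\rangle_2 \Big)

P({+}_1, {-}_2) = P({-}_1, {+}_2) = \Big|\pm\tfrac{1}{\sqrt{2}}\Big|^2 = \tfrac{1}{2},
\qquad
P({+}_1, {+}_2) = P({-}_1, {-}_2) = 0
```

Each individual outcome is random, with probability 1/2, yet the two particles are never found in the same state, so the results are perfectly (anti)correlated however far apart the particles are.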

But the random generation of alternative possibilities for thoughts and actions does not mean that our deliberations themselves are random, provided that the deliberative choice of one possible action is adequately determined.

For example, compatibilist philosophers argue that libertarians on free will fail to see that, if there is a random element involved in the generation of possible actions, agents would have no control over those actions and could not be held morally responsible. On the contrary, if an agent chooses to flip a coin to decide on an action, that choice can still be a deliberative act, and the agent can accept full responsibility for either outcome of the coin flip.
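The distinction between random generation and adequately determined selection can be sketched in a few lines. This is a toy illustration under stated assumptions, not an implementation of the two-stage model: the helper names, the fixed numeric "values" standing in for an agent's reasons, and the tie-breaking rule are all hypothetical. Chance decides only which alternatives come to mind; the choice among them is the same every time the same alternatives and values are given.

```python
import random


def generate_alternatives(options: list[str], k: int, rng: random.Random) -> list[str]:
    """Stage 1 (hypothetical helper): chance proposes k candidate actions."""
    return rng.sample(options, k)


def evaluate(action: str, values: dict[str, int]) -> int:
    """The agent's own reasons, reduced here to a fixed score per action."""
    return values.get(action, 0)


def decide(options: list[str], values: dict[str, int], k: int, rng: random.Random) -> str:
    """Stage 2: a deterministic evaluation selects among the proposed candidates.

    Ties are broken by action name, so identical candidates and values
    always yield the identical choice.
    """
    candidates = generate_alternatives(options, k, rng)
    return max(candidates, key=lambda a: (evaluate(a, values), a))


def coin_flip(a: str, b: str, rng: random.Random) -> str:
    """An agent may deliberately hand a decision to chance and own either outcome."""
    return a if rng.random() < 0.5 else b
```

If every option is proposed (`k == len(options)`), the seed no longer matters and the best-valued action is always chosen; with fewer candidates, chance shapes what is considered but never how it is judged.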

Information philosophy goes beyond a priori logic and its puzzles, beyond analytic language and its paradoxes, beyond philosophical claims of necessary truths, to a contingent physical world that is best represented as made of dynamic, interacting information structures.

The creation of new information structures exposes the error of determinism. In a deterministic universe there is no increase of information. All the past, present, and future information is present to the eyes of a super-intelligence, as Pierre-Simon Laplace argued.

Isaac Newton's classical mechanical laws of motion are not only deterministic, they are reversible in time. Many believe that if time could be reversed, the entire universe would proceed back in time to its earliest state, like a motion picture played backwards.
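The reversibility of Newton's laws can be checked numerically. A minimal sketch, assuming a single unit mass on a unit-stiffness spring and the velocity Verlet integrator (chosen because, like Newton's equations themselves, it is exactly time-reversible in exact arithmetic): run the system forward, reverse every velocity, run the same laws forward again, and it retraces its path to the starting state, up to floating-point rounding.

```python
def acceleration(x: float) -> float:
    # Assumed system: unit mass on a unit-stiffness spring, so F = m a = -x.
    return -x


def velocity_verlet(x: float, v: float, dt: float, steps: int) -> tuple[float, float]:
    """Integrate Newton's second law with the time-reversible velocity Verlet scheme."""
    for _ in range(steps):
        a = acceleration(x)
        x = x + v * dt + 0.5 * a * dt * dt
        v = v + 0.5 * (a + acceleration(x)) * dt
    return x, v


x0, v0 = 1.0, 0.0
# Run the "motion picture" forward for 1000 steps.
x1, v1 = velocity_verlet(x0, v0, dt=0.01, steps=1000)
# Reverse the velocity and apply the same laws for the same number of steps ...
xr, vr = velocity_verlet(x1, -v1, dt=0.01, steps=1000)
# ... and the system is back at x0, moving with velocity -v0 (up to rounding).
```

The only change made between the two runs is the sign of the velocity; the laws themselves are untouched. That is what "reversible in time" means for classical mechanics, and it is the property that quantum irreversibility, discussed next, breaks.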

Information philosophy has discovered the origin of irreversibility in the early work on quantum mechanics by Albert Einstein. Quantum indeterminism and irreversibility in turn contribute to the origin of information structures, which we have found in the work of Arthur Stanley Eddington and David Layzer. Third, quantum indeterminism and the creation of information structures are the bases for our two-stage model of free will, which we trace back to the thought of William James.

Information is said by some to be a conserved quantity, just like matter and energy. This is not the case. Determinism is a false belief, originating either in the theological idea that an omniscient and omnipotent God completely controls every event or in the Newtonian idea that unbreakable laws of nature do, with the tragic consequence that there can be no human freedom in a completely determined world.

Indeed, belief in determinism is the modern residue of the traditional belief in an overarching explanation - a determinative reason - for everything.

Knowledge can be defined as information in minds - a partial isomorphism of the information structures in the external world. Information philosophy is a correspondence theory.

Sadly, there is no isomorphism, no information in common, between words and objects. As the great Swiss linguist and structuralist philosopher Ferdinand de Saussure pointed out, the connection between most signifiers (words and other symbols) and the things signified (objects and concepts) is arbitrary, a connection established only by cultural convention. This arbitrariness accounts for much of the failure of analytic language philosophy in the past century.

Although language is an excellent tool for human communication, it is arbitrary, ambiguous, and ill-suited to represent the world directly. Human languages cannot "picture" reality, despite the hopes of early logical positivists like Ludwig Wittgenstein.

Information is the lingua franca of the universe.

The extraordinarily sophisticated connections between words and objects are made in human minds, mediated by the brain's experience recorder and reproducer (ERR). Words stimulate neurons to start firing and to play back those experiences that include relevant objects.

Neurons that were wired together in our earliest experiences fire together at later times, contextualizing our new experiences and giving them meaning. And by replaying emotional reactions to similar earlier experiences, the ERR makes them "subjective experiences," giving us the feeling of "what it's like to be me" and solving the "hard problem" of consciousness.
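"Neurons that fire together wire together" summarizes Donald Hebb's learning rule. A classic computational toy built on that rule is the Hopfield network, used here purely as an analogy and not as a claim about how the ERR works. Patterns of +1/−1 activity stand in for recorded experiences; connections are strengthened between units that were active together; and a partial or slightly wrong cue is then completed into the stored pattern, a crude kind of "playback." The pattern sizes and example data are hypothetical.

```python
def hebbian_weights(patterns: list[list[int]]) -> list[list[float]]:
    """Hebb's rule: w[i][j] grows when units i and j are active together."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / len(patterns)
    return w


def recall(w: list[list[float]], cue: list[int], sweeps: int = 5) -> list[int]:
    """Let units driven by their learned connections settle (asynchronous updates)."""
    s = list(cue)  # copy, so the cue itself is not modified
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s


# Two hypothetical recorded "experiences" (orthogonal +1/-1 patterns).
A = [1, 1, 1, 1, -1, -1, -1, -1]
B = [1, -1, 1, -1, 1, -1, 1, -1]
w = hebbian_weights([A, B])
cue = [1, 1, 1, -1, -1, -1, -1, -1]  # A with one unit wrong
recalled = recall(w, cue)  # settles back to A
```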

Beyond words, a dynamic information model of an information structure in the world is presented immediately to the mind as a simulation of reality experienced for itself.

Without prior experiences of words, recorded together with related experiences in our mental experience recorder, we could not comprehend words. They would be mere noise, with no meaning.

As pointed out to me by Layzer in 1975, Eddington's arrow of time (for him, the direction of entropy increase) points not only to increasing disorder (positive entropy) but also to increasing information (negative entropy), what Layzer called "the growth of order in the universe."
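Layzer made this quantitative by defining information, or order, as the gap between the maximum entropy a system could have and the entropy it actually has. As his argument is usually summarized, in an expanding universe the maximum possible entropy grows faster than the actual entropy, so entropy and information can increase together:

```latex
I(t) = S_{\max}(t) - S(t)

\frac{dS}{dt} \ge 0
\quad\text{and}\quad
\frac{dS_{\max}}{dt} > \frac{dS}{dt}
\;\;\Longrightarrow\;\;
\frac{dI}{dt} = \frac{dS_{\max}}{dt} - \frac{dS}{dt} > 0
```

The second law is respected throughout (entropy never decreases), yet order still grows, because the room for entropy grows faster than entropy itself.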

At the earliest times, purely physical forces (electromagnetic, nuclear, and gravitational) changed the arrangement of the most fundamental particles of matter and energy (quarks, electrons, gluons, and photons) into information structures like atoms and molecules, and much later into planets, stars, and galaxies.

Billions of years later, living things became active information structures. Living things control the flow of matter and energy through themselves and do their own arranging of their matter and energy constituents!

New immaterial information is forever emerging. We human beings are creating new ideas!

Purely physical objects like planets, stars, and galaxies are passive information structures, entirely controlled by fundamental physical forces - the strong and weak nuclear forces, electromagnetism, and gravitation. These objects do not control themselves. They are not acting. They are acted upon.

Living things, you and I, are active dynamic growing information structures, forms through which matter and energy continuously flow. And the communication of biological information controls those flows!

Some philosophers of biology (for example John Dupré and Anne Sophie Meincke) think that biological creation processes are fundamentally different from the creative processes in non-living things, in purely physical material objects, from atoms and molecules to planets, stars, and galaxies.

Before life as we know it, some information structures blindly replicated their information. Some of these replication processes were fatal mistakes, but very rarely the mistake was an improvement, with better reproductive success.

In life today, those random errors produce some of the variations followed by natural selection which adapts living things to their environments. The first stage in our two-stage model is the same blind randomness of chance in non-living and living things. What differs between these creative processes is the appearance of minds and of purpose, of goals and intentions, in the universe. All living things are agents!
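Blind replication with rare beneficial errors, followed by selection, can be sketched as a small simulation. Everything here is a hypothetical toy: replicators are short strings, `ENVIRONMENT` is a stand-in for environmental conditions (not a goal the replicators aim at, since copying is blind to it), and the population size and error rate are arbitrary. The first stage is pure chance (copying errors); only the second stage, selection by the environment, favors some variants over others.

```python
import random

# Hypothetical stand-in for environmental conditions. Copying below is blind to it.
ENVIRONMENT = "ORDER"
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def fitness(s: str) -> int:
    """How well a replicator fits its environment (number of matching positions)."""
    return sum(a == b for a, b in zip(s, ENVIRONMENT))


def replicate(s: str, error_rate: float, rng: random.Random) -> str:
    """Blind copying: each character is miscopied with probability error_rate."""
    return "".join(rng.choice(ALPHABET) if rng.random() < error_rate else c for c in s)


def evolve(generations: int, pop_size: int = 50,
           error_rate: float = 0.05, seed: int = 1) -> str:
    rng = random.Random(seed)
    pop = ["".join(rng.choice(ALPHABET) for _ in ENVIRONMENT) for _ in range(pop_size)]
    for _ in range(generations):
        # Stage 1, chance: every replicator makes one copy, possibly with errors.
        offspring = [replicate(s, error_rate, rng) for s in pop]
        # Stage 2, selection: the environment retains the better-fitting half.
        pop = sorted(pop + offspring, key=fitness, reverse=True)[:pop_size]
    return max(pop, key=fitness)  # best-fitting replicator
```

Most copying errors make things worse and are weeded out; the occasional improvement is kept. Because parents compete with their offspring here, the best fitness in the population can never decrease from one generation to the next.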

Even the smallest living things develop behaviors, sensing information about and reacting to their environment, sending signals between their parts (cells, organelles) and to other living things nearby. These behaviors can be interpreted as intentions, goals, and agency, introducing purpose into the universe.

The goals and purposes of living things are not the "final goal" or purpose of Aristotle's Metaphysics that he called "telos" (τέλος). Teleology is the idea that there is a cosmic purpose that preceded the creation of the universe and which points toward an end goal. Teleology underlies many theologies, in which a creator God embodies the telos, just as a sculptor previsualizes the statue within a block of marble. Perhaps the best known is that of Pierre Teilhard de Chardin, whose end goal, which he called the "Omega Point," is Jesus Christ. In many religions, the creator predestines or predetermines all the events in the universe, a theological idea that fit well with the mechanical and deterministic laws of Nature discovered by Isaac Newton in the seventeenth-century age of enlightenment.

The biologist Colin Pittendrigh coined the term teleonomy to distinguish the purpose we see in all living things from a hypothetical teleological purpose from before the origin of the universe. Jacques Monod and Ernst Mayr also stressed the important distinction between teleonomy and teleology.

Information is the modern spirit, the ghost in the machine, the mind in the body. It is the soul. When we die, it is information that perishes, unless the future preserves it. The matter remains.

Information philosophers think that if we don't remember the past, we don't deserve to be remembered by the future. This is especially true for the custodians of knowledge.

In the natural sciences the most important references are usually the most recent. In the humanities and social sciences the opposite is often true. The earliest references were invented ideas that became traditional beliefs, now deeply held without further justification.

This website is not based on the work of a single thinker. It includes the work of over five hundred philosophers and scientists, critically analyzed over seven decades by this information philosopher, with extensive quotations from the original thinkers and PDFs of major parts of their work (sometimes in the original language).
