
J. Willard Gibbs

Josiah Willard Gibbs earned the first American Ph.D. in engineering from Yale in 1863. He went to France in 1866, where he studied with the great Joseph Liouville, who formulated the theorem that the phase-space volume of a system evolving under a conservative Hamiltonian function is constant along the system's trajectory. The fluid inside the original phase-space volume is said to be incompressible. The Liouville theorem may not apply to gases if collisions are not time reversible, that is, if the particle collisions do not preserve path information.

Gibbs then travelled to Germany, where in Heidelberg he learned about the work of Rudolf Clausius, Hermann von Helmholtz, and Gustav Kirchhoff in physics, and Robert Bunsen in chemistry.

Back in New Haven, Gibbs published several long monographs.

Gibbs focused on five thermodynamical variables: volume, pressure, temperature, energy, and entropy. He showed that for a given body only two of these are independent variables, from which one can deduce the other three.

In 1873, his first monograph introduced new diagrams relating thermodynamical quantities to one another. In Graphical Methods in the Thermodynamics of Fluids, Gibbs explored various two-dimensional graphs that plot two of these variables against each other to exhibit thermodynamic properties. He first cites the success of the pressure-volume graphs that are most often used to illustrate the thermodynamics of the Carnot Cycle. Émile Clapeyron had first drawn such diagrams in 1834. Clausius had also used them in 1865.

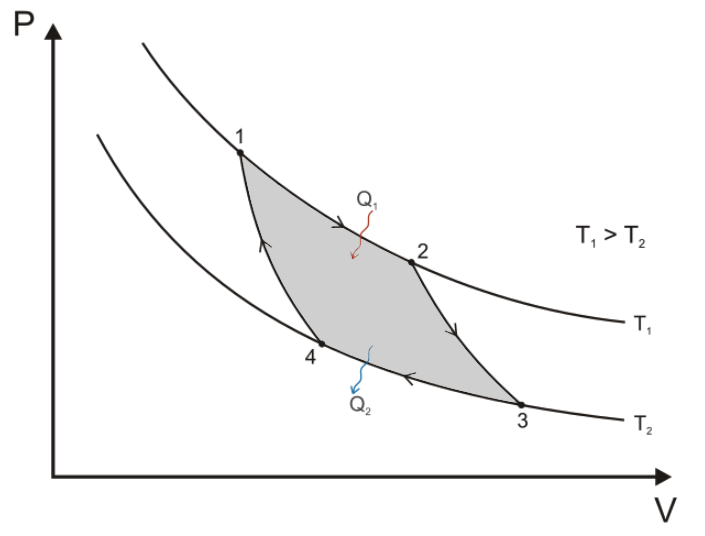

In the Carnot Cycle, the path 1>2 is an isotherm at the higher (source) temperature T1. Path 2>3 is usually called adiabatic, though Gibbs prefers isentropic. Path 3>4 is an isotherm at the lower (sink) temperature, and the return path 4>1 is also isentropic.

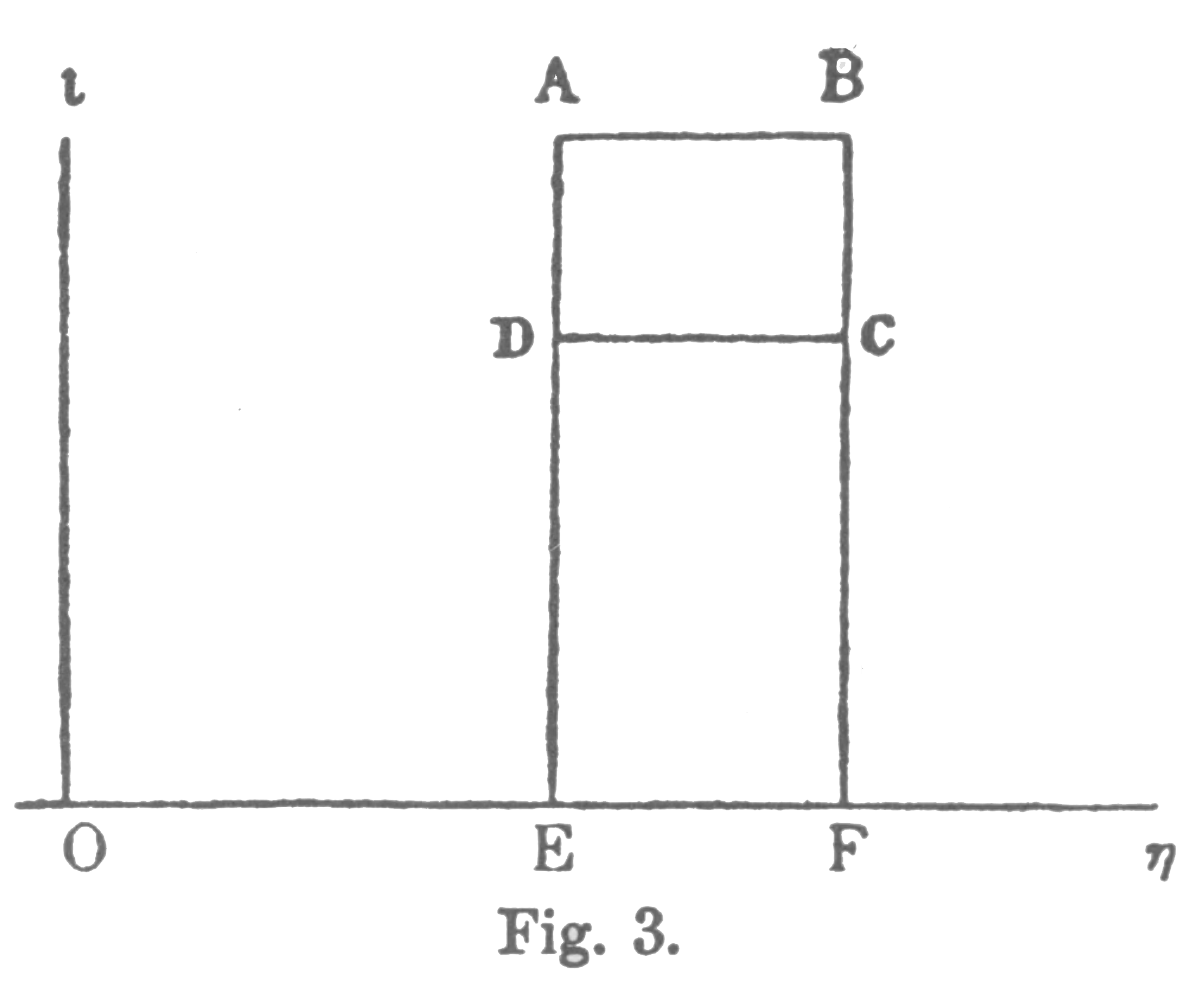

"It is worthy of notice that the simplest form of a perfect thermodynamic engine, so often described in treatises on thermodynamics, is represented in the entropy-temperature diagram by a figure of extreme simplicity, viz: a rectangle of which the sides are parallel to the co-ordinate axes."

Gibbs explains why an entropy-temperature graph is superior to pressure-volume. It is a "geometrical expression," a visualization of the second law of thermodynamics. Entropy, and "Negative Entropy" (cf. Information), are very difficult concepts to explain. He worries that entropy and the second law "may repel beginners as obscure and difficult of comprehension." They are usually presented with equations, which many non-scientists find difficult. After this first monograph, Gibbs fills his pages with dense equations. When the alternative is to use words, Gibbs says they are clumsy. It is sad that he did not continue his popularizing of this science with these simple diagrams.

"... The method in which the co-ordinates represent volume and pressure has a certain advantage in the simple and elementary character of the notions upon which it is based, and its analogy with Watt’s indicator has doubtless contributed to render it popular. On the other hand, a method involving the notion of entropy, the very existence of which depends upon the second law of thermodynamics, will doubtless seem to many far-fetched, and may repel beginners as obscure and difficult of comprehension. This inconvenience is perhaps more than counter-balanced by the advantages of a method which makes the second law of thermodynamics so prominent, and gives it so clear and elementary an expression."

Available Energy and Information

In his second monograph, also published in 1873, Gibbs introduces two terms that have come to dominate modern discussions, "dissipated" and "available" energy. He writes...

"For example, let it be required to find the greatest amount of mechanical work which can be obtained from a given quantity of a certain substance in a given initial state, without increasing its total volume or allowing heat to pass to or from external bodies, except such as at the close of the processes are left in their initial condition. This has been called the available energy of the body. The initial state of the body is supposed to be such that the body can be made to pass from it to states of dissipated energy by reversible processes."

Gibbs does not give us another graphical representation of these kinds of energy, but we can broadly identify "available" energy with area ABCD, labelled W, the work done above, and "dissipated" energy with CDEF, the waste heat sent to the low-temperature sink. Note that the heat from the high-temperature source is ABFE, the sum of ABCD and CDEF. In familiar modern terminology, the heat, or original energy content, is transformed into work and waste energy:

dQ = dW + TdS
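The area bookkeeping for the entropy-temperature rectangle can be checked with a few lines of Python. This is only a minimal sketch under stated assumptions: the cycle is an ideal (reversible) Carnot cycle, the lower rectangle CDEF is taken to extend down to absolute zero on the temperature axis, and the function name `carnot_areas` and the sample numbers are hypothetical illustrations, not values from Gibbs.

```python
def carnot_areas(t_hot, t_cold, delta_s):
    """Return (heat_in, work, waste_heat) for an ideal Carnot cycle.

    In the entropy-temperature diagram the cycle is a rectangle of
    width delta_s, so each quantity is the area of a rectangle.
    Temperatures are absolute (kelvins); delta_s is in J/K.
    """
    if not (t_hot > t_cold > 0):
        raise ValueError("need t_hot > t_cold > 0 (absolute temperatures)")
    heat_in = t_hot * delta_s            # area ABFE: heat drawn from the source
    waste_heat = t_cold * delta_s        # area CDEF: heat rejected to the sink
    work = (t_hot - t_cold) * delta_s    # area ABCD: the "available" part
    return heat_in, work, waste_heat


# Illustrative (assumed) numbers: source at 600 K, sink at 300 K, 2 J/K of entropy.
heat_in, work, waste = carnot_areas(t_hot=600.0, t_cold=300.0, delta_s=2.0)
efficiency = work / heat_in              # equals 1 - t_cold / t_hot

print(heat_in, work, waste, efficiency)
```

With these sample values the work and the waste heat add up to the heat taken from the source, which is the statement that ABFE is the sum of ABCD and CDEF.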

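Today we call the energy available to do work at constant temperature and pressure the Gibbs "Free Energy,"

G = U + pV - TS,

where U is the internal energy, p the pressure, V the volume, T the temperature, and S the entropy. (The combination U - TS, without the pV term, is the Helmholtz free energy.)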

So what is the connection between available "free" energy and information structures? Clearly, in a state of thermal equilibrium there is nothing of the "order" we associate with information. Equilibrium is the ultimate "disorder."

In our explanation of the two-step cosmic creation process, the second step is exporting positive entropy away from the newly formed information structure. In the first step, available energy, or work, is essential for arranging the particles into a structure, usually one among many possible arrangements.

In the early universe, the arrangement is controlled by quantum cooperative phenomena with electrostatic attractive and nuclear repulsive forces. In the subsequent billions of years, the formation of planets, stars, and galaxies is controlled by gravitational forces. Work is done by these forces as structural components are pulled together. The new configurations cannot be stable entities unless positive entropy is radiated away to satisfy the second law.

These information creation processes do not directly resemble the thermodynamic engines that Gibbs is discussing, with their obvious source of energy and heat sinks. But we can see the earliest universe as a cosmic source of high-energy particles and radiation. We can locate the cold sink inside the universe, since there is no "outside." The sink for waste energy is the expanding space itself. Today we can see clearly across vast empty space to the uniform cosmic microwave background radiation at a temperature under three kelvins.

The resemblance to a thermodynamic engine is easier to see for our Earth. The hot source is our Sun, whose radiation leaves the Sun at a temperature of thousands of degrees. When it reaches Earth, the temperature of its energy content is only hundreds of degrees, and when it is thermalized by the planet, it is radiated away from the dark side of Earth into the night sky.

Erwin Schrödinger described the Sun as the source of "negative entropy" on which "life feeds." He did not know how the Sun itself could get far enough from equilibrium to be the source of available energy. We explain that by the expansion of the universe.
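The Sun-Earth "engine" can be given a rough number with the simple heat-flow estimate of entropy, Q/T. The sketch below is hedged in several ways: the two temperatures (about 5800 K for sunlight and about 255 K for Earth's thermal emission) are assumed round figures not taken from this page, the function name `entropy_flow` is hypothetical, and the Q/T estimate ignores the extra factor of 4/3 that applies to the entropy of thermal radiation, which would not change the ratio.

```python
def entropy_flow(power, temperature):
    """Entropy carried per second (W/K) by `power` watts of heat
    flowing at absolute `temperature`, using the simple Q/T estimate."""
    if temperature <= 0:
        raise ValueError("temperature must be absolute and positive")
    return power / temperature


T_SUN = 5800.0    # K, assumed effective temperature of incoming sunlight
T_EARTH = 255.0   # K, assumed effective temperature of Earth's emission
POWER = 1.0       # W, follow one watt of energy passing through the Earth

s_in = entropy_flow(POWER, T_SUN)      # entropy arriving with the sunlight
s_out = entropy_flow(POWER, T_EARTH)   # entropy leaving as infrared radiation
net_export = s_out - s_in              # positive: Earth exports entropy
ratio = s_out / s_in                   # equals T_SUN / T_EARTH

print(s_in, s_out, net_export, ratio)
```

Under these assumptions each unit of energy leaves Earth carrying roughly 23 times the entropy it arrived with. That positive export is what allows local information structures to form without violating the second law.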

The cosmological and astrophysical "engines" are doing work not by extracting available energy from a hot gas or liquid and dumping waste energy as a material stream. They are doing work with forces that are action-at-a-distance. They are exporting their positive entropy by radiating it away to the empty space appearing between the information structures.

Gibbs' third monograph, in 1876, "On the Equilibrium of Heterogeneous Substances," began with Clausius' great first and second laws of thermodynamics in two simple sentences:

"Die Energie der Welt ist constant. Die Entropie der Welt strebt einem Maximum zu." ("The energy of the world is constant. The entropy of the world tends toward a maximum.")

Gibbs' "great memoir," as Lewis and Randall called it in 1923, contains a brief but careful explanation of what later writers called the "Gibbs Paradox" (pp.163-165). E. T. Jaynes said in 1992 that it came as a "shock" that Gibbs' explanation had been missed by textbook writers for 80 years. This passage also includes probably the most famous quote about the idea of spontaneous entropy decrease, one cited in hundreds of textbooks, starting with Boltzmann in 1898, and including Lewis and Randall's chapter 8, Entropy and Probability. Gibbs wrote...

"we may easily calculate the increase of entropy which takes place when two different gases are mixed by diffusion, at a constant temperature and pressure. Let us suppose that the quantities of the gases are such that each occupies initially one half of the total volume. If we denote this volume by V, the increase of entropy will be..."

Ludwig Boltzmann must have thought this passage extremely important. He used the last line as the opening quotation for the second volume of his Lectures on Gas Theory: "the impossibility of an uncompensated decrease of entropy seems to be reduced to improbability."

It was Gibbs' short text Elementary Principles in Statistical Mechanics, published in 1902, the year before his death, that brought him the most fame. In it, he coined the terms phase space and phase volume, and the name for his field, statistical mechanics. Earlier he had formulated his phase rule and named the chemical potential and the statistical ensemble. He showed how his graph planes can become the surfaces of three-dimensional objects that identify "phase changes," between gases and liquids, and between liquids and solids.

References

Graphical Methods in the Thermodynamics of Fluids

Gibbs "Paradox"

Gibbs paradox and its resolutions (a great list of references, but links are all dead)

Wikipedia

Stanford Encyclopedia of Philosophy
