
Erwin Schrödinger

Erwin Schrödinger is perhaps the most complex figure in twentieth-century discussions of quantum mechanical uncertainty, ontological chance, indeterminism, and the statistical interpretation of quantum mechanics.

In his early career, Schrödinger was a great exponent of fundamental chance in the universe. He followed his teacher Franz S. Exner, who was himself a colleague of the great Ludwig Boltzmann at the University of Vienna. Boltzmann used intrinsic randomness in molecular collisions (molecular chaos) to derive the increasing entropy of the Second Law of Thermodynamics. The macroscopic irreversibility of entropy increase depends on Boltzmann's molecular chaos, which in turn depends on randomness at the microscopic level (microscopic irreversibility).
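The link between random microscopic events and macroscopic entropy increase can be illustrated with the Ehrenfest urn model. This is a standard textbook toy model that we substitute here for illustration. It is not Boltzmann's own derivation, and all names and parameter values below are our assumptions.

In the model, N molecules are split between two halves of a box. At each step, one molecule chosen at random hops to the other half. The entropy is S = ln W in units of k_B, where W = C(N, n_left) counts the microstates. Starting with every molecule on one side, S rises from zero toward its maximum and then fluctuates near it.

```python
import math
import random

def boltzmann_entropy(n_left, n_total):
    """S = ln W in units of k_B, where W = C(N, n_left) counts the microstates."""
    return math.log(math.comb(n_total, n_left))

def ehrenfest_run(n_total=100, steps=2000, seed=1):
    """Ehrenfest urn: at each step one molecule, chosen at random, switches halves."""
    rng = random.Random(seed)
    n_left = n_total  # ordered, low-entropy start: every molecule in the left half
    entropies = [boltzmann_entropy(n_left, n_total)]
    for _ in range(steps):
        # The randomly chosen molecule is in the left half with probability n_left / N
        if rng.random() < n_left / n_total:
            n_left -= 1
        else:
            n_left += 1
        entropies.append(boltzmann_entropy(n_left, n_total))
    return entropies

entropies = ehrenfest_run()
print(f"initial S = {entropies[0]:.2f}")
print(f"final S   = {entropies[-1]:.2f}")
print(f"maximum S = {boltzmann_entropy(50, 100):.2f}  (half the molecules on each side)")
```

Each individual hop is random and could just as well be reversed. Even so, the entropy climbs and stays near its maximum, because a return to the all-left state is possible but astronomically improbable.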

Before the twentieth century, most physicists, mathematicians, and philosophers believed that the chance described by the calculus of probabilities was actually completely determined. The "bell curve" or "normal distribution" of random outcomes was itself so consistent that they argued for underlying deterministic laws governing individual events. They thought that we simply lack the knowledge necessary to make exact predictions for these individual events. Pierre-Simon Laplace was the first to see in his "calculus of probabilities" a universal law that determined the motions of everything from the largest astronomical objects to the smallest particles. In a Laplacian world, there is only one possible future.

On the other hand, in his inaugural lecture at Zurich in 1922, Schrödinger argued that the evidence did not justify our assumption that physical laws are deterministic and strictly causal. His inaugural lecture was modeled on that of Franz Serafin Exner in Vienna in 1908.

"Exner's assertion amounts to this: It is quite possible that Nature's laws are of thoroughly statistical character. The demand for an absolute law in the background of the statistical law, a demand which at the present day almost everybody considers imperative, goes beyond the reach of experience. Such a dual foundation for the orderly course of events in Nature is in itself improbable. The burden of proof falls on those who champion absolute causality, and not on those who question it. For a doubtful attitude in this respect is to-day by far the more natural."

Several years later, Schrödinger presented a paper on "Indeterminism in Physics" to the June 1931 congress of the Society for Philosophical Instruction in Berlin:

"Fifty years ago it was simply a matter of taste or philosophic prejudice whether the preference was given to determinism or indeterminism. The former was favored by ancient custom, or possibly by an a priori belief. In favor of the latter it could be urged that this ancient habit demonstrably rested on the actual laws which we observe functioning in our surroundings. As soon, however, as the great majority or possibly all of these laws are seen to be of a statistical nature, they cease to provide a rational argument for the retention of determinism."

Despite these strong arguments against determinism, just after he completed the wave mechanical formulation of quantum mechanics in June 1926 (the year Exner died), Schrödinger began to side with the determinists, including especially Max Planck and Albert Einstein (who in 1916 had discovered that ontological chance is involved in the emission of radiation).

Schrödinger's wave function is continuous and evolves smoothly in time under his wave equation. This is in sharp contrast to the discrete, discontinuous, and indeterministic "quantum jumps" of the Born-Heisenberg matrix mechanics. His wave equation seemed to Schrödinger to restore the continuous and deterministic nature of classical mechanics and dynamics. It also suggests that we may visualize particles as wave packets moving in spacetime, which was very important to Schrödinger. By contrast, Bohr and Heisenberg and their Copenhagen Interpretation of quantum mechanics insisted that visualization of quantum events is simply not possible. Einstein later agreed with Schrödinger that visualization (Anschaulichkeit) should be the goal of describing an "objective" reality.
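The deterministic, continuous character of the wave equation can be seen in the simplest possible case. The sketch below is our own illustration, not Schrödinger's example. It assumes a hypothetical two-level system with Hamiltonian H = (ω/2)σx, in units where ħ = 1, for which the exact solution is known in closed form.

The sketch shows three things:
- The same initial state always yields the same amplitudes at time t.
- The amplitudes change smoothly with t.
- The total probability stays equal to 1.

```python
import math

def evolve(state, omega, t):
    """Apply U(t) = exp(-i H t) for the assumed Hamiltonian H = (omega / 2) * sigma_x, hbar = 1.

    For this H, U(t) = cos(omega t / 2) * I - i * sin(omega t / 2) * sigma_x,
    and sigma_x simply swaps the two amplitudes.
    """
    a, b = state
    c = math.cos(omega * t / 2)
    s = math.sin(omega * t / 2)
    return (c * a - 1j * s * b, c * b - 1j * s * a)

psi0 = (1 + 0j, 0j)  # start entirely in the first basis state
for t in (0.0, 0.25, 0.5, 0.75, 1.0, 1.5):
    a, b = evolve(psi0, omega=2.0, t=t)
    print(f"t={t:.2f}  |a|^2={abs(a) ** 2:.3f}  |b|^2={abs(b) ** 2:.3f}  "
          f"norm={abs(a) ** 2 + abs(b) ** 2:.3f}")
```

Nothing in this evolution is random. Chance enters only when the amplitudes are interpreted as probabilities for measurement outcomes.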

Max Born, Werner Heisenberg's mentor and the senior partner in the team that created matrix mechanics, shocked Schrödinger with the interpretation of the wave function as a "probability amplitude."

Discouraged, Schrödinger wrote to his friend Willie Wien in August 1926 to reject Born's view that individual atomic processes are absolutely random.

The motions of particles are indeterministic and probabilistic, even if the equation of motion for the probability is deterministic.

It is true, said Born, that the wave function itself evolves deterministically, but its significance is that it predicts only the probability of finding an atomic particle somewhere. When and where particles would appear (to an observer, or to an observing system like a photographic plate) was completely and irreducibly random, he said. Born credited Einstein with the idea that the waves give us the probability of finding a particle, but this "statistical interpretation" of the wave function came to be known as "Born's Rule."
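Born's Rule can be stated in one line: the probability of an outcome is the squared magnitude of its amplitude. The sketch below is our illustration; the function names and the example amplitudes are hypothetical. It shows the contrast Born drew. The probabilities are fixed exactly by the state, while each individual result is drawn at random, here using Python's `random.choices` as a stand-in for "Nature's choice."

```python
import random

def born_probabilities(amplitudes):
    """Born's rule: outcome k has probability |c_k|^2 (amplitudes assumed normalized)."""
    return [abs(c) ** 2 for c in amplitudes]

def measure(amplitudes, rng):
    """Draw one outcome index at random, weighted by the Born-rule probabilities."""
    probs = born_probabilities(amplitudes)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

# A fixed, normalized state with a relative phase: |0.6|^2 + |0.8i|^2 = 1
amps = [0.6 + 0j, 0.8j]
rng = random.Random(1926)
outcomes = [measure(amps, rng) for _ in range(10_000)]

print(born_probabilities(amps))  # fixed by the state, with no randomness involved
print(outcomes[:20])             # individual results: an unpredictable sequence of 0s and 1s
print(outcomes.count(1) / len(outcomes))  # long-run frequency, close to 0.64 for many draws
```

The seeded pseudo-random generator makes the run repeatable. It only imitates, and does not reproduce, the irreducible randomness Born attributed to quantum events.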

Einstein had seen clearly for many years that quantum transitions involve chance, that quantum jumps are random, but he did not want to believe it. Although the Schrödinger equation of motion is itself continuous and deterministic, it is impossible to restore continuous deterministic behavior to material particles and return physics to strict causality. Even more than Einstein, Schrödinger hated this idea and never accepted it, despite the great success of quantum mechanics, which today uses Schrödinger's wave functions to calculate Heisenberg's matrix elements for atomic transition probabilities and all atomic properties.

In that August 1926 letter to Wien, Schrödinger wrote:

"[That discontinuous quantum jumps] offer the greatest conceptual difficulty for the achievement of a classical theory is gradually becoming even more evident to me... [yet] today I no longer like to assume with Born that an individual process of this kind is 'absolutely random,' i.e., completely undetermined. I no longer believe today that this conception (which I championed so enthusiastically four years ago) accomplishes much. From an offprint of Born's work in the Zeitsch f. Physik I know more or less how he thinks of things: the waves must be strictly causally determined through field laws, the wavefunctions on the other hand have only the meaning of probabilities for the actual motions of light- or material-particles."

Why did Schrödinger not simply welcome Born's absolute chance? It provides strong evidence that Boltzmann's assumption of chance in atomic collisions (molecular disorder) was completely justified. Boltzmann's idea that entropy is statistically irreversible depends on microscopic irreversibility. Exner thought chance is absolute, but did not live to see how fundamental it was to physics. And the early Epicurean idea that atoms sometimes "swerve" could be replaced by the insight that atoms are always swerving randomly when they interact with other atoms, and especially with radiation, as Einstein (reluctantly) found in 1916.

Could it be that senior scientists like Max Planck and Einstein were so delighted with Schrödinger's work that it turned his head? Planck, universally revered as the elder statesman of physics, invited Schrödinger to Berlin to take Planck's chair as the most important lecturer in physics at a German university. And Schrödinger shared Einstein's goal of developing a unified (continuous and deterministic) field theory. Schrödinger won the Nobel Prize in 1933. How different would our thinking about absolute chance be if perhaps the greatest theoretician of quantum mechanics had accepted random quantum jumps in 1926?
In his vigorous debates with Niels Bohr and Werner Heisenberg, Schrödinger attacked the probabilistic Copenhagen interpretation of his wave function with a famous thought experiment called Schrödinger's Cat, which was actually based on another Einstein suggestion.

Schrödinger was very pleased to read the Einstein-Podolsky-Rosen paper in 1935. He immediately wrote to Einstein in support of an attack on Bohr, Born, and Heisenberg and their "dogmatic" quantum mechanics:

"I was very happy that in the paper just published in P.R. you have evidently caught dogmatic q.m. by the coat-tails... My interpretation is that we do not have a q.m. that is consistent with relativity theory, i.e., with a finite transmission speed of all influences. We have only the analogy of the old absolute mechanics... The separation process is not at all encompassed by the orthodox scheme."

Einstein had said in 1927 at the Solvay conference that nonlocality (faster-than-light signaling between particles in a space-like separation) seemed to violate relativity. His case was a single-particle wave function with non-zero probabilities of finding the particle at more than one place. What instantaneous "action at a distance" prevents the particle from appearing at more than one place, Einstein oddly asked.

In his 1935 EPR paper, Einstein cleverly introduced two particles instead of one, and a two-particle wave function that describes both particles. The particles are identical, indistinguishable, and have indeterminate positions. Even so, EPR wanted to describe them as widely separated, one "here" and measurable "now," and the other distant and to be measured "later." Here we must explain the asymmetry that Einstein and Schrödinger mistakenly introduced into a perfectly symmetric situation, making entanglement such a mystery.
Schrödinger challenged Einstein's idea that two systems that had previously interacted can be treated as separated systems. Einstein assumed that a two-particle wave function ψ12 can be factored into a product of separated wave functions for each system, ψ1 and ψ2, and called this his "separability principle" (Trennungsprinzip). The particles cannot separate until another quantum interaction separates them.

Schrödinger published a famous paper defining his idea of "entanglement" in August of 1935. It began:

"When two systems, of which we know the states by their respective representatives, enter into temporary physical interaction due to known forces between them, and when after a time of mutual influence the systems separate again, then they can no longer be described in the same way as before, viz. by endowing each of them with a representative of its own. I would not call that one but rather the characteristic trait of quantum mechanics, the one that enforces its entire departure from classical lines of thought. By the interaction the two representatives (or ψ-functions) have become entangled."

In the following year, Schrödinger looked more carefully at Einstein's assumption that the entangled system could be separated enough to be regarded as two systems with independent wave functions:

"Years ago I pointed out that when two systems separate far enough to make it possible to experiment on one of them without interfering with the other, they are bound to pass, during the process of separation, through stages which were beyond the range of quantum mechanics as it stood then. For it seems hard to imagine a complete separation, whilst the systems are still so close to each other, that, from the classical point of view, their interaction could still be described as an unretarded actio in distans. And ordinary quantum mechanics, on account of its thoroughly unrelativistic character, really only deals with the actio in distans case. The whole system (comprising in our case both systems) has to be small enough to be able to neglect the time that light takes to travel across the system, compared with such periods of the system as are essentially involved in the changes that take place..."

Schrödinger described the puzzle of entanglement in terms of the answers the two entangled particles can give to questions put to them. This set an unfortunate precedent of explaining entanglement in terms of knowledge (epistemology) about the entangled particles, rather than what may "really" be going on (ontology). He wrote:

"...the result of measuring p2 serves to predict the result for p1 and vice versa. But of course every one of the four observations in question, when actually performed, disentangles the systems, furnishing each of them with an independent representative of its own. A second observation, whatever it is and on whichever system it is executed, produces no further change in the representative of the other system. Yet since I can predict either x1 or p1 without interfering with system No. 1 and since system No. 1, like a scholar in examination, cannot possibly know which of the two questions I am going to ask it first: it so seems that our scholar is prepared to give the right answer to the first question he is asked, anyhow. Therefore he must know both answers; which is an amazing knowledge."

Schrödinger says that the entangled system may become disentangled (Einstein's separation) and yet some perfect correlations between later measurements might remain. Note that the entangled system could simply decohere as a result of interactions with the environment, as proposed by decoherence theorists. The perfectly correlated results of Bell-inequality experiments might nevertheless be preserved, depending on the interaction. And of course the particles will be disentangled by a measurement of either one, for example by Alice or Bob in the case of Bell's Theorem.
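Schrödinger's criterion, that an entangled ψ12 cannot be written as a product ψ1ψ2, can be tested mechanically when each system has only two states. The sketch below is our illustration, not Schrödinger's method, and the function names are hypothetical. It writes ψ12 = Σ cij |i⟩|j⟩ and relies on one fact: a product state has cij = ai bj, so its 2×2 coefficient matrix has zero determinant. The test as written applies only to a pair of two-state systems.

```python
import math

def product_coefficients(psi1, psi2):
    """Coefficients c[i][j] = psi1[i] * psi2[j] of the product state psi1 psi2."""
    return [[a * b for b in psi2] for a in psi1]

def is_product_state(c, tol=1e-12):
    """True if the two-system state sum_ij c[i][j] |i>|j> factors into psi1 psi2.

    For two two-state systems, a product state has c[i][j] = a[i] * b[j], which forces
    det(c) = c00*c11 - c01*c10 = 0; conversely a zero determinant means c has rank <= 1,
    so (for a nonzero state) it factors.
    """
    det = c[0][0] * c[1][1] - c[0][1] * c[1][0]
    return abs(det) < tol

r = 1 / math.sqrt(2)
# Index 0 = "+" (spin up), 1 = "-" (spin down)
separable = product_coefficients([r, r], [1.0, 0.0])  # psi1 = (|+> + |->)/sqrt2, psi2 = |+>
singlet = [[0.0, r], [-r, 0.0]]                        # (|+ -> - |- +>)/sqrt2

print(is_product_state(separable))  # factors into psi1 psi2
print(is_product_state(singlet))    # does not factor: Schrödinger's "entangled" case
```

The singlet's coefficient matrix has determinant 1/2. No choice of ψ1 and ψ2 can reproduce it, which is exactly what "cannot be represented as a simple product" means here.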

Bell's Theorem

Following David Bohm's version of EPR, John Bell considered two spin-1/2 particles in an entangled state with total spin zero. In the notation of Schrödinger's entangled two-particle wave function ψ12, this singlet state can be written as

| ψ > = (1/√2) | + - > - (1/√2) | - + >

This is a superposition of two states, each of which has zero total spin component along the measurement axis, so either outcome conserves total spin zero. The minus sign makes the state antisymmetric: it changes sign when the two identical electrons are interchanged.
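Both properties claimed for this state can be checked directly. The sketch below is our illustration, with a representation of our own choosing, nothing standard. It stores the state as a Python dictionary mapping basis kets to amplitudes, swaps the particle labels, and confirms that every amplitude changes sign. It also confirms that each component has total S_z = 0.

It checks spin along one axis only. The stronger statement, that the singlet has total spin zero along every axis, is not tested here.

```python
import math

r = 1 / math.sqrt(2)
# |psi> = (1/sqrt2)|+ -> - (1/sqrt2)|- +>, stored as {(spin of particle 1, spin of particle 2): amplitude}
singlet = {("+", "-"): r, ("-", "+"): -r}

def exchange(state):
    """Swap the labels of particle 1 and particle 2 in every basis ket."""
    return {(s2, s1): amp for (s1, s2), amp in state.items()}

def total_sz(ket):
    """Total z-component of spin (in units of hbar) of a two-particle basis ket."""
    value = {"+": 0.5, "-": -0.5}
    return sum(value[s] for s in ket)

swapped = exchange(singlet)
antisymmetric = all(math.isclose(swapped[k], -singlet[k]) for k in singlet)
print("antisymmetric under exchange:", antisymmetric)
print("total S_z of each component:", [total_sz(k) for k in singlet])
```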

Schrödinger does not mention conservation principles, the deep reason for the perfect correlations between various observables: conservation of mass, energy, momentum, angular momentum, and, in Bell's case, spin.

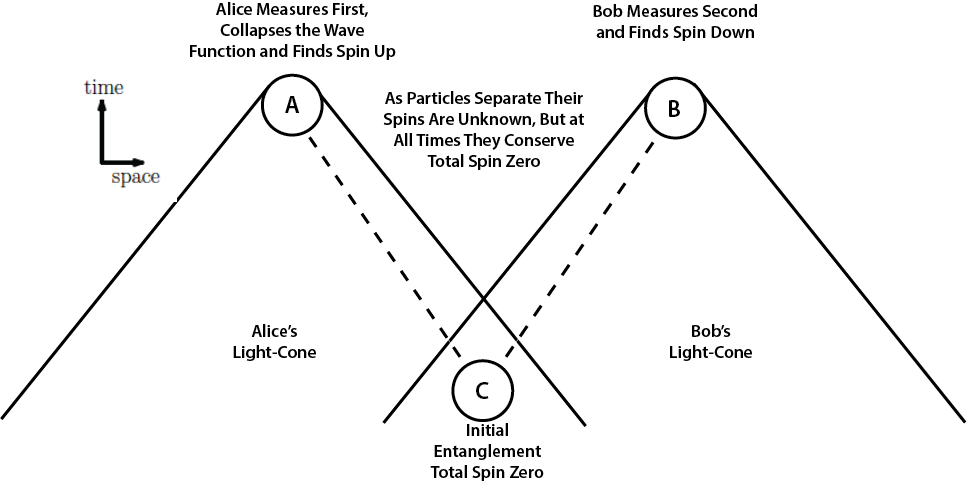

Let's assume that Alice makes a measurement of a spin component of one particle, say the x-component. First, her measurement projects the entangled system into either the | + - > or the | - + > state. Alice randomly measures + (spin up) or - (spin down). A succession of such measurements produces the bit string with "true randomness" that is needed for use as a quantum key in quantum cryptography. Whenever Alice measures spin up, Bob measures spin down, but only if he measures the same x-component. If Bob measures at any other angle, the perfect anti-correlation that distributes quantum key pairs to Alice and Bob is lost.

Whether the source is a calcium cascade or spontaneous parametric down-conversion, at initial entanglement the spins of the two particles are (causally) projected into a singlet state. Its wave function is spherically symmetric with total spin zero, and it has no preferred spatial direction. Two locally causal interactions inside the initial entanglement apparatus project the entangled particles into a spherically symmetric two-particle quantum state ΨAB. Erwin Schrödinger said such a state cannot be represented as a simple product of independent single-particle states ΨAΨB.

As the particles travel away from the central apparatus C in opposite directions toward measurement devices at A and B, the two-particle wave function is in a spherically symmetric singlet state with total spin angular momentum zero.

The wave function is the antisymmetric superposition (1/√2)(ΨA ↑ΨB ↓ - ΨA ↓ΨB ↑), with the same minus sign as the singlet state written above.

The total spin zero is conserved as a constant of the motion until a locally causal interaction at either A or B collapses the two-particle wave function, decohering it into a product of single-particle states, either ΨA ↑ΨB ↓ or ΨA ↓ΨB ↑. The constant of the motion is not a locally causal process like the initial entanglement and the separate final measurements, but it is a condition or constraint that puts limits on the measurement outcomes. Schrödinger says the final measurement disentangles the particles. The particles remain correlated, but measurement of one will no longer affect the other, he said.

As long as the final measurements are made at the same pre-agreed angle, their planar symmetry will maintain the initial symmetry, conserving our constant of the motion, total spin zero. The particles will produce perfectly correlated opposite spin states, either up-down or down-up. Individual spin states will be randomly up or down.
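The same-angle case can be mimicked with a short simulation. It is a minimal sketch under stated assumptions. It does not model how nature produces the outcomes; it only samples from the standard quantum probabilities for a spin singlet. With analyzers differing by θ, Alice's result is ±1 with probability 1/2 each, and Bob's result is opposite with probability cos²(θ/2).

The function name `measure_pair` is hypothetical. A real quantum key distribution protocol adds steps this sketch omits, such as random basis choices and error estimation.

```python
import math
import random

def measure_pair(theta, rng):
    """Sample (Alice, Bob) results, +1 for spin up and -1 for spin down, for one singlet pair.

    Assumes the textbook quantum probabilities for analyzers differing by angle theta:
    Alice gets +1 or -1 with probability 1/2 each, and Bob's result is opposite to
    Alice's with probability cos^2(theta / 2).
    """
    alice = 1 if rng.random() < 0.5 else -1
    opposite = rng.random() < math.cos(theta / 2) ** 2
    bob = -alice if opposite else alice
    return alice, bob

rng = random.Random(7)
pairs = [measure_pair(0.0, rng) for _ in range(32)]  # both analyzers along x

alice_key = "".join("1" if a == 1 else "0" for a, _ in pairs)
bob_key = "".join("1" if b == -1 else "0" for _, b in pairs)  # Bob inverts his results
print(alice_key)             # a random bit string
print(bob_key == alice_key)  # True: same-axis results are perfectly anticorrelated
```

At θ = 0, Bob's bits are always the inverse of Alice's, so after inverting them both hold the same random key. At any other angle, some pairs come out with matching spins, and the shared key is corrupted.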

However, should the measurements at A and B not be made at the same angle, the perfect correlations will be reduced, as quantum mechanics predicts. If the measurement angles differ by θ, the probability of opposite results for spin-1/2 particles falls from 1 to cos²(θ/2). For photon polarizations, the probability of matching results falls to cos²θ.

We note that no information is communicated (at any speed) between A and B. The two bits of information are locally created at A and at B, distally caused by the initial entanglement at C. These bits of information did not exist while the particles were in transit to A and B. They were proximally caused by the locally causal measurements at A and at B.
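For reference, here are the standard quantum predictions for two spin-1/2 particles in the singlet state, with analyzers differing by θ. This is a textbook result, stated without derivation. The correlation E is the average of the product of the two ±1 results:

$$
P_{\text{opposite}}(\theta) = \cos^2\frac{\theta}{2}, \qquad
P_{\text{same}}(\theta) = \sin^2\frac{\theta}{2},
$$

$$
E(\theta) = P_{\text{same}}(\theta) - P_{\text{opposite}}(\theta) = -\cos\theta .
$$

At θ = 0 this gives perfect anticorrelation, E = -1. At θ = 90° the results are uncorrelated, E = 0. For the polarizations of photon pairs from a calcium cascade, the corresponding correlation is E(θ) = cos 2θ.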

Bell's inequality was a study of how these perfect correlations fall off as a function of the angle between measurements by Alice and Bob. Bell predicted local hidden variables would produce a linear function of this angle, whereas, he said, quantum mechanics should produce a dependence on the cosine of this angle. As the angle changes, admixtures of other states will be found, for example | + + > in which Bob also measures spin up, or one where Bob detects no particle.
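The contrast between a linear and a cosine fall-off can be made concrete. The sketch below uses a planar simplification of the kind of local hidden-variable model Bell discussed; the function names are hypothetical.

In this model, each pair carries a hidden angle λ fixed at the source. Alice reports sign(cos λ), and Bob reports -sign(cos(λ - θ)), so every outcome is predetermined. Averaging over λ gives the linear law E(θ) = -1 + 2θ/π, which the code compares with the quantum -cos θ.

The last lines add the CHSH combination, a later form of Bell's inequality due to Clauser, Horne, Shimony, and Holt (1969). Local models of this kind keep it at or below 2, while quantum mechanics reaches 2√2 at the angles chosen.

```python
import math

def lhv_correlation(theta, n=20_000):
    """Average A*B in a toy local hidden-variable model (a planar version of Bell's example).

    Each pair carries a hidden angle lam, uniform on [0, 2*pi), fixed at the source.
    Alice (axis 0) reports sign(cos(lam)); Bob (axis theta) reports -sign(cos(lam - theta)).
    The outcomes are predetermined by lam; we average over an evenly spaced grid of lam.
    """
    total = 0
    for k in range(n):
        lam = (k + 0.5) * 2 * math.pi / n
        alice = 1 if math.cos(lam) >= 0 else -1
        bob = -1 if math.cos(lam - theta) >= 0 else 1
        total += alice * bob
    return total / n

def qm_correlation(theta):
    """Quantum prediction for the spin singlet: E(theta) = -cos(theta)."""
    return -math.cos(theta)

for deg in (0, 30, 45, 60, 90, 135, 180):
    th = math.radians(deg)
    print(f"{deg:3d} deg   local model {lhv_correlation(th):+.3f}   "
          f"linear {(-1 + 2 * th / math.pi):+.3f}   QM {qm_correlation(th):+.3f}")

def chsh(corr):
    """CHSH combination for analyzer angles a = 0, a' = 90, b = 45, b' = 135 degrees."""
    a, a2, b, b2 = (math.radians(x) for x in (0, 90, 45, 135))
    return abs(corr(abs(a - b)) - corr(abs(a - b2)) + corr(abs(a2 - b)) + corr(abs(a2 - b2)))

print("CHSH, local model:", round(chsh(lhv_correlation), 3))  # local models satisfy <= 2
print("CHSH, quantum:    ", round(chsh(qm_correlation), 3))  # 2*sqrt(2), about 2.828
```

Both curves agree at 0°, 90°, and 180°. They differ in between, which is where experiments can tell them apart.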

Bell wrote that "Since we can predict in advance the result of measuring any chosen component of σ2, by previously measuring the same component of σ1, it follows that the result of any such measurement must actually be predetermined."

But note that these values were not determined (they did not even exist according to the Copenhagen Interpretation) before Alice's measurement. According to Werner Heisenberg and Pascual Jordan, the spin components are created by the measurements in the x-direction. According to Paul Dirac, Alice's random x-component value depended on "Nature's choice."


Quantum Jumping

In 1952, Schrödinger wrote two influential articles in the British Journal for the Philosophy of Science denying quantum jumping. These papers greatly influenced generations of quantum collapse deniers, including John Bell, John Wheeler, Wojciech Zurek, and H. Dieter Zeh.

On Determinism and Free Will

In Schrödinger's mystical epilogue to his essay What Is Life? (1944), he "proves God and immortality at a stroke" but leaves us in the dark about free will.

As a reward for the serious trouble I have taken to expound the purely scientific aspects of our problem sine ira et studio [without anger or partiality], I beg leave to add my own, necessarily subjective, view of the philosophical implications.

Order, Disorder, and Entropy

Chapter 6 of What Is Life?

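"Nec corpus mentem ad cogitandum, nec mens corpus ad motum, neque ad quietem, nec ad aliquid (si quid est) aliud determinare potest." (The body cannot determine the mind to think, nor can the mind determine the body to motion or rest, or to anything else, if there is anything else.) Spinoza, Ethics, Pt. III, Prop. 2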